2.7 - Vanishing gradients

!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);


replicating local resources

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

from IPython.display import Image

%matplotlib inline

import tensorflow as tf

tf.__version__

'2.19.0'

Forward/backpropagation calculations: https://medium.com/@14prakash/back-propagation-is-very-simple-who-made-it-complicated-97b794c97e5c

Vanishing gradient example: harinisuresh/VanishingGradient

https://adventuresinmachinelearning.com/vanishing-gradient-problem-tensorflow/

from tensorflow import keras


Visualizing and understanding vanishing gradients

Make sure you understand the backpropagation algorithm well. You may work through the calculations illustrated below by hand to consolidate your understanding.

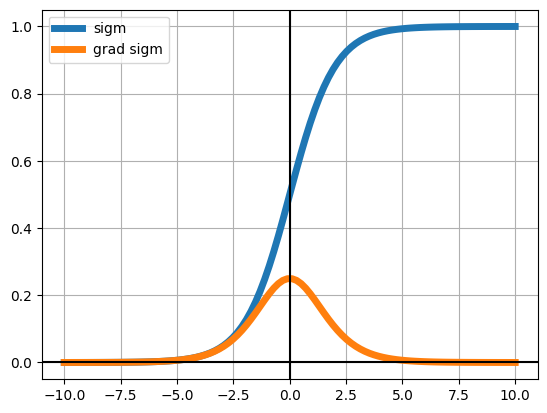

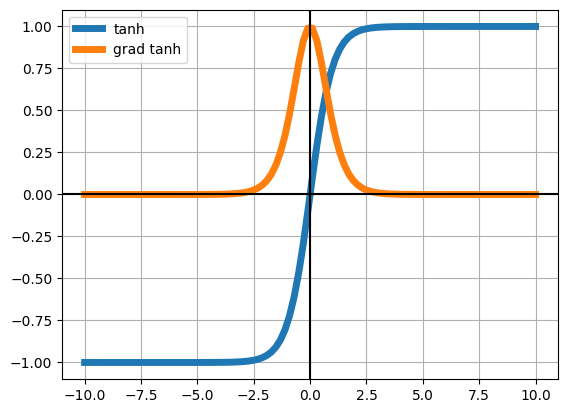

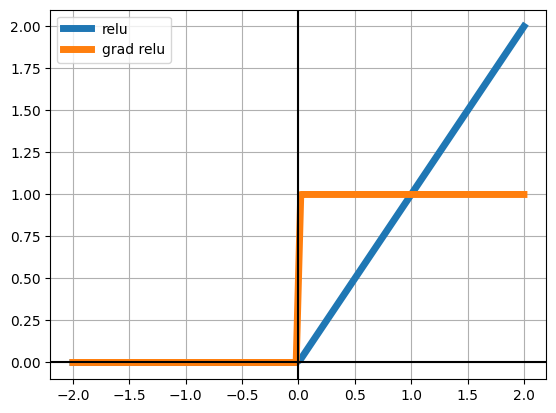

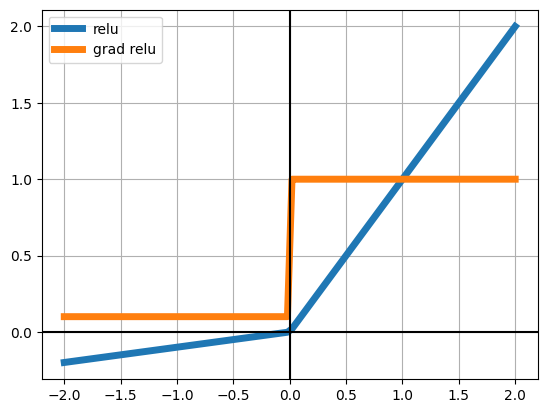
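As a complement to the hand calculations above, the sketch below does the same chain-rule bookkeeping in code for the simplest possible deep network: a chain of single sigmoid neurons with squared-error loss. The weights, biases, input, target and loss (`ws`, `bs`, `x`, `y`, `L = 0.5*(a_n - y)**2`) are illustrative assumptions, not the network shown in the figures. It checks one backpropagated gradient against a finite difference and shows the gradient magnitude shrinking towards the first layer.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, ws, bs):
    """Chain of single sigmoid neurons: a_k = sigmoid(w_k * a_{k-1} + b_k), a_0 = x."""
    a, zs, acts = x, [], [x]
    for w, b in zip(ws, bs):
        z = w * a + b
        a = sigmoid(z)
        zs.append(z)
        acts.append(a)
    return zs, acts

def grads(x, y, ws, bs):
    """Backpropagation for L = 0.5 * (a_n - y)**2; returns dL/dw_k for every layer k."""
    zs, acts = forward(x, ws, bs)
    delta = acts[-1] - y                                       # dL/da_n
    dws = [0.0] * len(ws)
    for k in reversed(range(len(ws))):
        delta = delta * sigmoid(zs[k]) * (1 - sigmoid(zs[k]))  # dL/dz_k (multiplied by sigmoid')
        dws[k] = delta * acts[k]                               # dL/dw_k = dL/dz_k * a_{k-1}
        delta = delta * ws[k]                                  # dL/da_{k-1}, passed to the layer below
    return dws

# hypothetical 8-layer chain: all weights 1, all biases 0
ws, bs = [1.0] * 8, [0.0] * 8
x, y = 1.0, 0.0
dws = grads(x, y, ws, bs)
print(["%.1e" % g for g in dws])   # magnitudes grow from the first to the last layer

# central finite-difference check of dL/dw_1
loss = lambda w_list: 0.5 * (forward(x, w_list, bs)[1][-1] - y) ** 2
eps = 1e-5
w_plus, w_minus = list(ws), list(ws)
w_plus[0] += eps
w_minus[0] -= eps
print((loss(w_plus) - loss(w_minus)) / (2 * eps), dws[0])
```

Every step down the chain multiplies the gradient by `sigmoid'(z_k) * w_k`, which is what the hand calculation does one layer at a time.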

We will look at the following activation functions. Observe for which input values each function's gradient becomes negligible (very close to zero).

sigmoid

z = np.linspace(-10,10,100)

sigm = lambda z: 1/(1+np.exp(-z))

dsigm = lambda z: sigm(z)*(1-sigm(z))

plt.plot(z, sigm(z), lw=5, label="sigm")

plt.plot(z, dsigm(z), lw=5, label="grad sigm")

plt.grid()

plt.axvline(0, color="black");

plt.axhline(0, color="black");

plt.legend()

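The plot suggests that the sigmoid derivative never exceeds 0.25. A quick numerical check with the same `sigm`/`dsigm` definitions confirms the peak at z = 0 and shows how fast the gradient decays for large |z|. Because each sigmoid layer multiplies the backpropagated gradient by at most 0.25, this bound is one of the main reasons deep sigmoid networks suffer from vanishing gradients.

```python
import numpy as np

sigm = lambda z: 1 / (1 + np.exp(-z))
dsigm = lambda z: sigm(z) * (1 - sigm(z))

z = np.linspace(-10, 10, 2001)                 # fine grid that contains z = 0
print(z[np.argmax(dsigm(z))], dsigm(z).max())  # peak ≈ 0.25 at z ≈ 0
for v in [0.0, 2.0, 5.0, 10.0]:
    print(v, dsigm(v))                         # 0.25, then ≈ 0.105, ≈ 0.0066, ≈ 4.5e-05
```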

tanh

z = np.linspace(-10,10,100)

tanh = lambda z: (np.exp(z)-np.exp(-z))/(np.exp(z)+np.exp(-z))

dtanh = lambda z: 1 - tanh(z)**2

plt.plot(z, tanh(z), lw=5, label="tanh")

plt.plot(z, dtanh(z), lw=5, label="grad tanh")

plt.grid()

plt.axvline(0, color="black");

plt.axhline(0, color="black");

plt.legend()

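tanh is closely related to the sigmoid: tanh(z) = 2·sigm(2z) - 1. Its derivative peaks at 1 instead of 0.25, so near z = 0 a tanh unit passes gradients through almost unchanged, but it still saturates for large |z|. This sketch checks both facts numerically; it uses NumPy's built-in `np.tanh`, which, unlike the explicit exponential formula above, does not overflow for large |z|.

```python
import numpy as np

sigm = lambda z: 1 / (1 + np.exp(-z))
z = np.linspace(-10, 10, 2001)

# tanh is a rescaled and recentred sigmoid
print(np.allclose(np.tanh(z), 2 * sigm(2 * z) - 1))   # True

dtanh = lambda z: 1 - np.tanh(z) ** 2
print(dtanh(0.0))    # 1.0, four times the sigmoid's peak of 0.25
print(dtanh(5.0))    # ≈ 1.8e-04: already saturated
```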

ReLU (Rectified Linear Unit)

z = np.linspace(-2,2,100)

relu = np.vectorize(lambda z: z if z>0 else 0.)

drelu = np.vectorize(lambda z: 1 if z>0 else 0.)

plt.plot(z, relu(z), lw=5, label="relu")

plt.plot(z, drelu(z), lw=5, label="grad relu")

plt.grid()

plt.axvline(0, color="black");

plt.axhline(0, color="black");

plt.legend()

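`np.vectorize` works but is essentially a Python loop. An equivalent, fully vectorized version uses `np.maximum` and `np.where`. As in the lambdas above, the derivative at exactly z = 0 (where ReLU is not differentiable) is taken to be 0, which is a convention. The property that matters for this section shows up in the output: the gradient is exactly 1 for every positive input, so active ReLU units do not shrink the backpropagated gradient, while negative inputs block it completely.

```python
import numpy as np

relu = lambda z: np.maximum(z, 0.0)              # no np.vectorize needed
drelu = lambda z: np.where(z > 0, 1.0, 0.0)      # gradient taken as 0 at z = 0

print(relu(np.array([-1.5, 0.0, 2.0])))    # [0. 0. 2.]
print(drelu(np.array([-1.5, 0.0, 2.0])))   # [0. 0. 1.]
```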

Leaky ReLU (Rectified Linear Unit)

z = np.linspace(-2,2,100)

lrelu = np.vectorize(lambda z: z if z>0 else .1*z)

dlrelu = np.vectorize(lambda z: 1 if z>0 else .1)

plt.plot(z, lrelu(z), lw=5, label="leaky relu")

plt.plot(z, dlrelu(z), lw=5, label="grad leaky relu")

plt.grid();

plt.axvline(0, color="black");

plt.axhline(0, color="black");

plt.legend()

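Putting the derivative plots together: backpropagating through n layers multiplies the gradient by one activation derivative per layer (together with the weights, which this rough sketch deliberately ignores). With n = 10, roughly the depth of the models trained below, the bounds work out as follows. Note that the leaky ReLU model below passes `tf.nn.leaky_relu`, whose default negative slope `alpha` is 0.2, not the 0.1 used in the plot above.

```python
n_layers = 10

# bounds on the product of activation derivatives along one backward path
sigmoid_best = 0.25 ** n_layers    # sigmoid' <= 0.25 everywhere
relu_active = 1.0 ** n_layers      # relu' = 1 along a path of active (z > 0) units
leaky_worst = 0.1 ** n_layers      # leaky relu' >= 0.1 (slope used in the plot above)

print(f"sigmoid, best case:     {sigmoid_best:.2e}")   # 9.54e-07
print(f"relu, active path:      {relu_active:.2e}")    # 1.00e+00
print(f"leaky relu, worst case: {leaky_worst:.2e}")    # 1.00e-10, small but never exactly 0
```

Even in its best case the sigmoid chain shrinks the gradient by about six orders of magnitude, whereas a ReLU path is either untouched (all units active) or cut to exactly zero, and leaky ReLU avoids the exact zero.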

Load sample MNIST data as customary

mnist = pd.read_csv("local/data/mnist1.5k.csv.gz", compression="gzip", header=None).values

X=mnist[:,1:785]/255.

y=mnist[:,0]

print("image and class dimensions", X.shape, y.shape)

image and class dimensions (1500, 784) (1500,)

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=.2)


y_train_oh = np.eye(10)[y_train]

y_test_oh = np.eye(10)[y_test]

print(X_train.shape, y_train_oh.shape)

(1200, 784) (1200, 10)
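The `np.eye(10)[y_train]` idiom builds one-hot targets by fancy indexing: row i of the 10×10 identity matrix is exactly the one-hot vector for class i, so indexing with the label array picks one row per sample. It requires integer labels (cast with `.astype(int)` otherwise). A small illustration with made-up labels; Keras also provides `keras.utils.to_categorical` for the same purpose.

```python
import numpy as np

y = np.array([3, 0, 9])        # made-up integer labels
y_oh = np.eye(10)[y]           # one row of the identity matrix per label
print(y_oh.shape)              # (3, 10)
print(y_oh[0])                 # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
print(y_oh.argmax(axis=1))     # [3 0 9]: argmax recovers the original labels
```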

from tensorflow.keras import Sequential, Model

from tensorflow.keras.layers import Dense, Dropout, Flatten, concatenate, Input

from tensorflow.keras.backend import clear_session

from tensorflow import keras

A basic multi-layer dense model

Observe that `get_model` lets us parametrize the number of hidden layers and their activation function.

!rm -rf log

def get_model(input_dim=784, output_dim=10, num_hidden_layers=6, hidden_size=10, activation="relu"):
    model = Sequential()
    # first Dense layer reads the 784 pixel inputs
    # (Keras 3 emits a UserWarning for input_dim and prefers an Input(shape) layer)
    model.add(Dense(hidden_size, activation=activation, input_dim=input_dim, name="Layer_%02d_Input"%(0)))
    # num_hidden_layers identical hidden layers using the chosen activation
    for i in range(num_hidden_layers):
        model.add(Dense(hidden_size, activation=activation, name="Layer_%02d_Hidden"%(i+1)))
    # softmax output over the 10 digit classes
    model.add(Dense(output_dim, activation="softmax", name="Layer_%02d_Output"%(num_hidden_layers+1)))
    model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
    return model
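To see the effect numerically rather than only through the training curves, here is a minimal NumPy sketch (not Keras) of one forward and backward pass through a network with the same layout as `get_model`: one input Dense layer, `num_hidden_layers` hidden layers of `hidden_size` units, and a softmax output with categorical cross-entropy. It returns the norm of each layer's weight gradient. Assumptions: Glorot-uniform kernels and zero biases (the Keras `Dense` defaults), random inputs in [0, 1] standing in for MNIST pixels, and random labels; `layer_grad_norms`, `glorot_uniform` and `ACTIVATIONS` are hypothetical names introduced only for this illustration.

```python
import numpy as np

def sigmoid(z):
    return 1 / (1 + np.exp(-z))

# name -> (activation, derivative with respect to the pre-activation z)
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda z: sigmoid(z) * (1 - sigmoid(z))),
    "relu": (lambda z: np.maximum(z, 0.0), lambda z: (z > 0).astype(float)),
}

def glorot_uniform(rng, fan_in, fan_out):
    # uniform in [-limit, limit], the rule behind Keras's default "glorot_uniform"
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def layer_grad_norms(activation, num_hidden_layers=10, hidden_size=10,
                     input_dim=784, output_dim=10, batch=32, seed=0):
    """Frobenius norm of dLoss/dW for every Dense layer of a get_model-like
    network, ordered from the first (input) layer to the softmax output layer."""
    rng = np.random.default_rng(seed)
    f, df = ACTIVATIONS[activation]
    sizes = [input_dim] + [hidden_size] * (num_hidden_layers + 1) + [output_dim]
    Ws = [glorot_uniform(rng, m, n) for m, n in zip(sizes[:-1], sizes[1:])]
    bs = [np.zeros(n) for n in sizes[1:]]

    X = rng.uniform(0, 1, size=(batch, input_dim))              # stand-in for pixels in [0, 1]
    Y = np.eye(output_dim)[rng.integers(0, output_dim, batch)]  # random one-hot targets

    # forward pass: keep pre-activations (zs) and activations (acts) for backprop
    acts, zs = [X], []
    for W, b in zip(Ws[:-1], bs[:-1]):
        zs.append(acts[-1] @ W + b)
        acts.append(f(zs[-1]))
    logits = acts[-1] @ Ws[-1] + bs[-1]
    p = np.exp(logits - logits.max(axis=1, keepdims=True))
    p /= p.sum(axis=1, keepdims=True)                            # softmax

    # backward pass: softmax + mean categorical cross-entropy gives (p - Y) / batch
    delta = (p - Y) / batch
    norms = [np.linalg.norm(acts[-1].T @ delta)]                 # output layer
    for k in range(len(Ws) - 2, -1, -1):
        delta = (delta @ Ws[k + 1].T) * df(zs[k])                # chain rule through layer k
        norms.append(np.linalg.norm(acts[k].T @ delta))
    return norms[::-1]

for act in ["sigmoid", "relu"]:
    print(act, " ".join(f"{n:.1e}" for n in layer_grad_norms(act)))
```

With sigmoid one would expect the first layer's gradient to be several orders of magnitude smaller than the last hidden layer's, consistent with the flat sigmoid training curves below; with ReLU the drop is typically much smaller. Exact values depend on the seed.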

SIGMOID activation

model = get_model(num_hidden_layers=10, activation="sigmoid")

!rm -rf log/sigmoid

tb_callback = keras.callbacks.TensorBoard(log_dir='./log/sigmoid', histogram_freq=1, write_graph=True, write_images=True)

model.fit(X_train, y_train_oh, epochs=30, batch_size=32, validation_data=(X_test, y_test_oh), callbacks=[tb_callback])

/usr/local/lib/python3.12/dist-packages/keras/src/layers/core/dense.py:93: UserWarning: Do not pass an `input_shape`/`input_dim` argument to a layer. When using Sequential models, prefer using an `Input(shape)` object as the first layer in the model instead.

super().__init__(activity_regularizer=activity_regularizer, **kwargs)

Epoch 1/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 5s 23ms/step - accuracy: 0.1054 - loss: 2.3731 - val_accuracy: 0.1067 - val_loss: 2.3696

Epoch 2/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 2s 13ms/step - accuracy: 0.1028 - loss: 2.3353 - val_accuracy: 0.1067 - val_loss: 2.3355

Epoch 3/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 14ms/step - accuracy: 0.0926 - loss: 2.3204 - val_accuracy: 0.1067 - val_loss: 2.3203

Epoch 4/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.1052 - loss: 2.3042 - val_accuracy: 0.1233 - val_loss: 2.3130

Epoch 5/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.1037 - loss: 2.3028 - val_accuracy: 0.1233 - val_loss: 2.3093

Epoch 6/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1215 - loss: 2.3017 - val_accuracy: 0.1233 - val_loss: 2.3053

Epoch 7/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1330 - loss: 2.2979 - val_accuracy: 0.1233 - val_loss: 2.3039

Epoch 8/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.1190 - loss: 2.3007 - val_accuracy: 0.1233 - val_loss: 2.3030

Epoch 9/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1241 - loss: 2.2997 - val_accuracy: 0.1233 - val_loss: 2.3019

Epoch 10/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.1261 - loss: 2.2991 - val_accuracy: 0.1233 - val_loss: 2.3024

Epoch 11/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1299 - loss: 2.2960 - val_accuracy: 0.1233 - val_loss: 2.3018

Epoch 12/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1386 - loss: 2.2974 - val_accuracy: 0.1233 - val_loss: 2.3017

Epoch 13/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 14ms/step - accuracy: 0.1185 - loss: 2.2999 - val_accuracy: 0.1233 - val_loss: 2.3021

Epoch 14/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 20ms/step - accuracy: 0.1196 - loss: 2.2992 - val_accuracy: 0.1233 - val_loss: 2.3008

Epoch 15/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 19ms/step - accuracy: 0.1262 - loss: 2.2959 - val_accuracy: 0.1233 - val_loss: 2.3022

Epoch 16/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 18ms/step - accuracy: 0.1383 - loss: 2.2975 - val_accuracy: 0.1233 - val_loss: 2.3000

Epoch 17/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.1224 - loss: 2.3021 - val_accuracy: 0.1233 - val_loss: 2.3014

Epoch 18/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1309 - loss: 2.2959 - val_accuracy: 0.1233 - val_loss: 2.3023

Epoch 19/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1255 - loss: 2.2970 - val_accuracy: 0.1233 - val_loss: 2.3013

Epoch 20/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1314 - loss: 2.2937 - val_accuracy: 0.1233 - val_loss: 2.3016

Epoch 21/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1175 - loss: 2.2988 - val_accuracy: 0.1233 - val_loss: 2.3016

Epoch 22/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.1213 - loss: 2.2989 - val_accuracy: 0.1233 - val_loss: 2.3007

Epoch 23/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.1429 - loss: 2.2939 - val_accuracy: 0.1233 - val_loss: 2.3005

Epoch 24/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1247 - loss: 2.2984 - val_accuracy: 0.1233 - val_loss: 2.3018

Epoch 25/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.1363 - loss: 2.2947 - val_accuracy: 0.1233 - val_loss: 2.3022

Epoch 26/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1287 - loss: 2.2956 - val_accuracy: 0.1233 - val_loss: 2.3010

Epoch 27/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1320 - loss: 2.2975 - val_accuracy: 0.1233 - val_loss: 2.3010

Epoch 28/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1205 - loss: 2.3009 - val_accuracy: 0.1233 - val_loss: 2.3007

Epoch 29/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.1338 - loss: 2.2950 - val_accuracy: 0.1233 - val_loss: 2.3016

Epoch 30/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.1216 - loss: 2.2993 - val_accuracy: 0.1233 - val_loss: 2.3011


ReLU activation

model = get_model(num_hidden_layers=10, activation="relu")

!rm -rf log/relu

tb_callback = keras.callbacks.TensorBoard(log_dir='./log/relu', histogram_freq=1, write_graph=True, write_images=True)

model.fit(X_train, y_train_oh, epochs=30, batch_size=32, validation_data=(X_test, y_test_oh), callbacks=[tb_callback])

Epoch 1/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 4s 20ms/step - accuracy: 0.0761 - loss: 2.3020 - val_accuracy: 0.1467 - val_loss: 2.2875

Epoch 2/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.1180 - loss: 2.2585 - val_accuracy: 0.2067 - val_loss: 2.1309

Epoch 3/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2003 - loss: 2.0828 - val_accuracy: 0.2200 - val_loss: 2.0145

Epoch 4/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2092 - loss: 1.9246 - val_accuracy: 0.2000 - val_loss: 1.9175

Epoch 5/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.2034 - loss: 1.8308 - val_accuracy: 0.2167 - val_loss: 1.8493

Epoch 6/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2405 - loss: 1.7564 - val_accuracy: 0.2733 - val_loss: 1.7784

Epoch 7/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2829 - loss: 1.6109 - val_accuracy: 0.3433 - val_loss: 1.7027

Epoch 8/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.3451 - loss: 1.4697 - val_accuracy: 0.3833 - val_loss: 1.6274

Epoch 9/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.4028 - loss: 1.3674 - val_accuracy: 0.4367 - val_loss: 1.6473

Epoch 10/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.3966 - loss: 1.3169 - val_accuracy: 0.4067 - val_loss: 1.6630

Epoch 11/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 18ms/step - accuracy: 0.4123 - loss: 1.2316 - val_accuracy: 0.4367 - val_loss: 1.6200

Epoch 12/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 18ms/step - accuracy: 0.4763 - loss: 1.1386 - val_accuracy: 0.5067 - val_loss: 1.7163

Epoch 13/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 22ms/step - accuracy: 0.5058 - loss: 1.1010 - val_accuracy: 0.4967 - val_loss: 1.6629

Epoch 14/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.5511 - loss: 1.0193 - val_accuracy: 0.4967 - val_loss: 1.6722

Epoch 15/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.5650 - loss: 1.0143 - val_accuracy: 0.5200 - val_loss: 1.5476

Epoch 16/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.5950 - loss: 0.9485 - val_accuracy: 0.5200 - val_loss: 1.6630

Epoch 17/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6264 - loss: 0.9099 - val_accuracy: 0.5267 - val_loss: 1.6491

Epoch 18/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.5965 - loss: 0.9193 - val_accuracy: 0.5633 - val_loss: 1.5708

Epoch 19/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6360 - loss: 0.8918 - val_accuracy: 0.5200 - val_loss: 1.6918

Epoch 20/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.6560 - loss: 0.8420 - val_accuracy: 0.5567 - val_loss: 1.6657

Epoch 21/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6177 - loss: 0.9001 - val_accuracy: 0.5700 - val_loss: 1.7047

Epoch 22/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.6718 - loss: 0.8106 - val_accuracy: 0.5767 - val_loss: 1.7745

Epoch 23/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6374 - loss: 0.8486 - val_accuracy: 0.5800 - val_loss: 1.6881

Epoch 24/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6945 - loss: 0.7626 - val_accuracy: 0.6133 - val_loss: 1.6876

Epoch 25/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.7263 - loss: 0.7405 - val_accuracy: 0.5833 - val_loss: 1.7602

Epoch 26/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7265 - loss: 0.7421 - val_accuracy: 0.6100 - val_loss: 1.8384

Epoch 27/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.7126 - loss: 0.7803 - val_accuracy: 0.6033 - val_loss: 1.8377

Epoch 28/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7535 - loss: 0.7120 - val_accuracy: 0.5967 - val_loss: 1.8472

Epoch 29/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.7638 - loss: 0.6707 - val_accuracy: 0.6133 - val_loss: 1.9387

Epoch 30/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7216 - loss: 0.7566 - val_accuracy: 0.6233 - val_loss: 1.8856


Leaky ReLU activation

import tensorflow as tf

model = get_model(num_hidden_layers=10, activation=tf.nn.leaky_relu)

!rm -rf log/leaky_relu

tb_callback = keras.callbacks.TensorBoard(log_dir='./log/leaky_relu', histogram_freq=1, write_graph=True, write_images=True)

model.fit(X_train, y_train_oh, epochs=30, batch_size=32, validation_data=(X_test, y_test_oh), callbacks=[tb_callback])

Epoch 1/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 4s 21ms/step - accuracy: 0.1153 - loss: 2.3002 - val_accuracy: 0.1833 - val_loss: 2.2727

Epoch 2/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2004 - loss: 2.2264 - val_accuracy: 0.2167 - val_loss: 2.0627

Epoch 3/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.2128 - loss: 2.0220 - val_accuracy: 0.2200 - val_loss: 2.0331

Epoch 4/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.2566 - loss: 1.8607 - val_accuracy: 0.2667 - val_loss: 1.8322

Epoch 5/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.3321 - loss: 1.6833 - val_accuracy: 0.2867 - val_loss: 1.7486

Epoch 6/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.3403 - loss: 1.5454 - val_accuracy: 0.3200 - val_loss: 1.6454

Epoch 7/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.3849 - loss: 1.4163 - val_accuracy: 0.3333 - val_loss: 1.5965

Epoch 8/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.3988 - loss: 1.3185 - val_accuracy: 0.3500 - val_loss: 1.6274

Epoch 9/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.4312 - loss: 1.2683 - val_accuracy: 0.4733 - val_loss: 1.4862

Epoch 10/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.5286 - loss: 1.1724 - val_accuracy: 0.4267 - val_loss: 1.6519

Epoch 11/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 17ms/step - accuracy: 0.5403 - loss: 1.1548 - val_accuracy: 0.4767 - val_loss: 1.5348

Epoch 12/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 21ms/step - accuracy: 0.6052 - loss: 1.0305 - val_accuracy: 0.4767 - val_loss: 1.5166

Epoch 13/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 20ms/step - accuracy: 0.6104 - loss: 0.9848 - val_accuracy: 0.5367 - val_loss: 1.4080

Epoch 14/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6407 - loss: 0.9210 - val_accuracy: 0.4900 - val_loss: 1.6197

Epoch 15/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 14ms/step - accuracy: 0.6302 - loss: 0.8917 - val_accuracy: 0.5100 - val_loss: 1.7791

Epoch 16/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.6345 - loss: 0.9043 - val_accuracy: 0.6100 - val_loss: 1.3989

Epoch 17/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.6641 - loss: 0.8482 - val_accuracy: 0.5733 - val_loss: 1.5803

Epoch 18/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7033 - loss: 0.7594 - val_accuracy: 0.5500 - val_loss: 1.5795

Epoch 19/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7261 - loss: 0.7307 - val_accuracy: 0.6167 - val_loss: 1.5252

Epoch 20/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7317 - loss: 0.7193 - val_accuracy: 0.6167 - val_loss: 1.6626

Epoch 21/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.8123 - loss: 0.5962 - val_accuracy: 0.6033 - val_loss: 1.8928

Epoch 22/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7938 - loss: 0.6043 - val_accuracy: 0.6000 - val_loss: 1.8191

Epoch 23/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 12ms/step - accuracy: 0.7695 - loss: 0.6307 - val_accuracy: 0.6100 - val_loss: 1.8240

Epoch 24/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.7817 - loss: 0.6184 - val_accuracy: 0.6267 - val_loss: 1.7866

Epoch 25/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.8311 - loss: 0.5729 - val_accuracy: 0.6567 - val_loss: 1.8094

Epoch 26/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 12ms/step - accuracy: 0.8459 - loss: 0.4978 - val_accuracy: 0.5933 - val_loss: 2.1872

Epoch 27/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.8579 - loss: 0.4858 - val_accuracy: 0.6567 - val_loss: 2.0357

Epoch 28/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.8561 - loss: 0.4508 - val_accuracy: 0.6367 - val_loss: 2.2305

Epoch 29/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 1s 13ms/step - accuracy: 0.8440 - loss: 0.4778 - val_accuracy: 0.6400 - val_loss: 2.1412

Epoch 30/30

38/38 ━━━━━━━━━━━━━━━━━━━━ 0s 13ms/step - accuracy: 0.8460 - loss: 0.4737 - val_accuracy: 0.6367 - val_loss: 2.0085


SIGMOID activation, longer run (300 epochs)

model = get_model(num_hidden_layers=10, activation="sigmoid")

!rm -rf log/sigmoid_longrun

tb_callback = keras.callbacks.TensorBoard(log_dir='./log/sigmoid_longrun', histogram_freq=1, write_graph=True, write_images=True)

model.fit(X_train, y_train_oh, epochs=300, batch_size=32, validation_data=(X_test, y_test_oh), callbacks=[tb_callback])

Train on 1200 samples, validate on 300 samples

Epoch 1/300

1200/1200 [==============================] - 1s 651us/sample - loss: 2.4060 - accuracy: 0.1083 - val_loss: 2.4034 - val_accuracy: 0.0733

Epoch 2/300

1200/1200 [==============================] - 0s 146us/sample - loss: 2.3483 - accuracy: 0.1083 - val_loss: 2.3558 - val_accuracy: 0.0733

Epoch 3/300

1200/1200 [==============================] - 0s 95us/sample - loss: 2.3228 - accuracy: 0.1083 - val_loss: 2.3319 - val_accuracy: 0.0733

Epoch 4/300

1200/1200 [==============================] - 0s 94us/sample - loss: 2.3105 - accuracy: 0.1083 - val_loss: 2.3200 - val_accuracy: 0.0733

Epoch 5/300

1200/1200 [==============================] - 0s 93us/sample - loss: 2.3049 - accuracy: 0.1083 - val_loss: 2.3140 - val_accuracy: 0.0733

Epoch 6/300

1200/1200 [==============================] - 0s 98us/sample - loss: 2.3020 - accuracy: 0.1083 - val_loss: 2.3091 - val_accuracy: 0.0733

Epoch 7/300

1200/1200 [==============================] - 0s 103us/sample - loss: 2.3004 - accuracy: 0.1175 - val_loss: 2.3061 - val_accuracy: 0.1367

Epoch 8/300

1200/1200 [==============================] - 0s 99us/sample - loss: 2.2997 - accuracy: 0.1208 - val_loss: 2.3052 - val_accuracy: 0.1367

Epoch 9/300

1200/1200 [==============================] - 0s 89us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3035 - val_accuracy: 0.1367

Epoch 10/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2993 - accuracy: 0.1208 - val_loss: 2.3022 - val_accuracy: 0.1367

Epoch 11/300

1200/1200 [==============================] - 0s 76us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3025 - val_accuracy: 0.1367

Epoch 12/300

1200/1200 [==============================] - 0s 87us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3021 - val_accuracy: 0.1367

Epoch 13/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2986 - accuracy: 0.1208 - val_loss: 2.3020 - val_accuracy: 0.1367

Epoch 14/300

1200/1200 [==============================] - 0s 88us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3013 - val_accuracy: 0.1367

Epoch 15/300

1200/1200 [==============================] - 0s 80us/sample - loss: 2.2994 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 16/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2985 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 17/300

1200/1200 [==============================] - 0s 98us/sample - loss: 2.2986 - accuracy: 0.1208 - val_loss: 2.3021 - val_accuracy: 0.1367

Epoch 18/300

1200/1200 [==============================] - 0s 93us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 19/300

1200/1200 [==============================] - 0s 75us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 20/300

1200/1200 [==============================] - 0s 87us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3015 - val_accuracy: 0.1367

Epoch 21/300

1200/1200 [==============================] - 0s 77us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3010 - val_accuracy: 0.1367

Epoch 22/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3015 - val_accuracy: 0.1367

Epoch 23/300

1200/1200 [==============================] - 0s 89us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3022 - val_accuracy: 0.1367

Epoch 24/300

1200/1200 [==============================] - 0s 85us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3016 - val_accuracy: 0.1367

Epoch 25/300

1200/1200 [==============================] - 0s 88us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 26/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3022 - val_accuracy: 0.1367

Epoch 27/300

1200/1200 [==============================] - 0s 88us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3009 - val_accuracy: 0.1367

Epoch 28/300

1200/1200 [==============================] - 0s 83us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3017 - val_accuracy: 0.1367

Epoch 29/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2992 - accuracy: 0.1208 - val_loss: 2.3021 - val_accuracy: 0.1367

Epoch 30/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3005 - val_accuracy: 0.1367

Epoch 31/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2997 - accuracy: 0.1208 - val_loss: 2.3007 - val_accuracy: 0.1367

Epoch 32/300

1200/1200 [==============================] - 0s 85us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3009 - val_accuracy: 0.1367

Epoch 33/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 34/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2986 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 35/300

1200/1200 [==============================] - 0s 77us/sample - loss: 2.2992 - accuracy: 0.1208 - val_loss: 2.3008 - val_accuracy: 0.1367

Epoch 36/300

1200/1200 [==============================] - 0s 90us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3013 - val_accuracy: 0.1367

Epoch 37/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2990 - accuracy: 0.1208 - val_loss: 2.3010 - val_accuracy: 0.1367

Epoch 38/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3022 - val_accuracy: 0.1367

Epoch 39/300

1200/1200 [==============================] - 0s 81us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3015 - val_accuracy: 0.1367

Epoch 40/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2992 - accuracy: 0.1208 - val_loss: 2.2998 - val_accuracy: 0.1367

Epoch 41/300

1200/1200 [==============================] - 0s 87us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 42/300

1200/1200 [==============================] - 0s 81us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3010 - val_accuracy: 0.1367

Epoch 43/300

1200/1200 [==============================] - 0s 96us/sample - loss: 2.2990 - accuracy: 0.1208 - val_loss: 2.3010 - val_accuracy: 0.1367

Epoch 44/300

1200/1200 [==============================] - 0s 85us/sample - loss: 2.2986 - accuracy: 0.1208 - val_loss: 2.3009 - val_accuracy: 0.1367

Epoch 45/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2990 - accuracy: 0.1208 - val_loss: 2.3024 - val_accuracy: 0.1367

Epoch 46/300

1200/1200 [==============================] - 0s 95us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3013 - val_accuracy: 0.1367

Epoch 47/300

1200/1200 [==============================] - 0s 83us/sample - loss: 2.2994 - accuracy: 0.1208 - val_loss: 2.3003 - val_accuracy: 0.1367

Epoch 48/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 49/300

1200/1200 [==============================] - 0s 82us/sample - loss: 2.2990 - accuracy: 0.1208 - val_loss: 2.3015 - val_accuracy: 0.1367

Epoch 50/300

1200/1200 [==============================] - 0s 83us/sample - loss: 2.2990 - accuracy: 0.1208 - val_loss: 2.3017 - val_accuracy: 0.1367

Epoch 51/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3007 - val_accuracy: 0.1367

Epoch 52/300

1200/1200 [==============================] - 0s 77us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3006 - val_accuracy: 0.1367

Epoch 53/300

1200/1200 [==============================] - 0s 86us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3013 - val_accuracy: 0.1367

Epoch 54/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3014 - val_accuracy: 0.1367

Epoch 55/300

1200/1200 [==============================] - 0s 100us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3018 - val_accuracy: 0.1367

Epoch 56/300

1200/1200 [==============================] - 0s 95us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 57/300

1200/1200 [==============================] - 0s 98us/sample - loss: 2.2991 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 58/300

1200/1200 [==============================] - 0s 79us/sample - loss: 2.2993 - accuracy: 0.1208 - val_loss: 2.3025 - val_accuracy: 0.1367

Epoch 59/300

1200/1200 [==============================] - 0s 75us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3010 - val_accuracy: 0.1367

Epoch 60/300

1200/1200 [==============================] - 0s 73us/sample - loss: 2.2992 - accuracy: 0.1208 - val_loss: 2.3007 - val_accuracy: 0.1367

Epoch 61/300

1200/1200 [==============================] - 0s 70us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3018 - val_accuracy: 0.1367

Epoch 62/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3013 - val_accuracy: 0.1367

Epoch 63/300

1200/1200 [==============================] - 0s 71us/sample - loss: 2.2989 - accuracy: 0.1208 - val_loss: 2.3015 - val_accuracy: 0.1367

Epoch 64/300

1200/1200 [==============================] - 0s 75us/sample - loss: 2.2987 - accuracy: 0.1208 - val_loss: 2.3009 - val_accuracy: 0.1367

Epoch 65/300

1200/1200 [==============================] - 0s 80us/sample - loss: 2.2988 - accuracy: 0.1208 - val_loss: 2.3012 - val_accuracy: 0.1367

Epoch 66/300

1200/1200 [==============================] - 0s 92us/sample - loss: 2.2986 - accuracy: 0.1208 - val_loss: 2.2998 - val_accuracy: 0.1367

Epoch 67/300

1200/1200 [==============================] - 0s 123us/sample - loss: 2.2981 - accuracy: 0.1208 - val_loss: 2.3011 - val_accuracy: 0.1367

Epoch 68/300

1200/1200 [==============================] - 0s 87us/sample - loss: 2.2979 - accuracy: 0.1208 - val_loss: 2.2991 - val_accuracy: 0.1367

Epoch 69/300

1200/1200 [==============================] - 0s 95us/sample - loss: 2.2965 - accuracy: 0.1208 - val_loss: 2.2994 - val_accuracy: 0.1367

Epoch 70/300

1200/1200 [==============================] - 0s 120us/sample - loss: 2.2942 - accuracy: 0.1208 - val_loss: 2.2961 - val_accuracy: 0.1367

Epoch 71/300

1200/1200 [==============================] - 0s 88us/sample - loss: 2.2903 - accuracy: 0.1208 - val_loss: 2.2911 - val_accuracy: 0.1367

Epoch 72/300

1200/1200 [==============================] - 0s 77us/sample - loss: 2.2834 - accuracy: 0.1208 - val_loss: 2.2844 - val_accuracy: 0.1367

Epoch 73/300

1200/1200 [==============================] - 0s 80us/sample - loss: 2.2721 - accuracy: 0.1892 - val_loss: 2.2724 - val_accuracy: 0.1967

Epoch 74/300

1200/1200 [==============================] - 0s 78us/sample - loss: 2.2529 - accuracy: 0.2208 - val_loss: 2.2524 - val_accuracy: 0.1867

Epoch 75/300

1200/1200 [==============================] - 0s 81us/sample - loss: 2.2274 - accuracy: 0.2167 - val_loss: 2.2272 - val_accuracy: 0.1933

Epoch 76/300

1200/1200 [==============================] - 0s 91us/sample - loss: 2.1899 - accuracy: 0.2175 - val_loss: 2.1966 - val_accuracy: 0.1900

Epoch 77/300

1200/1200 [==============================] - 0s 82us/sample - loss: 2.1599 - accuracy: 0.2125 - val_loss: 2.1768 - val_accuracy: 0.1867

Epoch 78/300

1200/1200 [==============================] - 0s 80us/sample - loss: 2.1414 - accuracy: 0.1942 - val_loss: 2.1362 - val_accuracy: 0.1867

Epoch 79/300

1200/1200 [==============================] - 0s 80us/sample - loss: 2.1016 - accuracy: 0.2142 - val_loss: 2.1332 - val_accuracy: 0.1767

Epoch 80/300

1200/1200 [==============================] - 0s 73us/sample - loss: 2.0676 - accuracy: 0.2167 - val_loss: 2.0811 - val_accuracy: 0.1900

Epoch 81/300

1200/1200 [==============================] - 0s 72us/sample - loss: 2.0350 - accuracy: 0.2158 - val_loss: 2.0669 - val_accuracy: 0.1900

Epoch 82/300

1200/1200 [==============================] - 0s 77us/sample - loss: 2.0002 - accuracy: 0.2192 - val_loss: 2.0515 - val_accuracy: 0.1900

Epoch 83/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.9843 - accuracy: 0.2192 - val_loss: 2.1011 - val_accuracy: 0.1900

Epoch 84/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.9793 - accuracy: 0.2150 - val_loss: 2.0330 - val_accuracy: 0.1867

Epoch 85/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.9727 - accuracy: 0.2200 - val_loss: 2.0690 - val_accuracy: 0.1900

Epoch 86/300

1200/1200 [==============================] - 0s 145us/sample - loss: 1.9660 - accuracy: 0.2183 - val_loss: 2.0241 - val_accuracy: 0.1900

Epoch 87/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.9452 - accuracy: 0.2183 - val_loss: 2.0296 - val_accuracy: 0.1900

Epoch 88/300

1200/1200 [==============================] - 0s 74us/sample - loss: 1.9365 - accuracy: 0.2192 - val_loss: 2.0107 - val_accuracy: 0.1800

Epoch 89/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.9346 - accuracy: 0.2175 - val_loss: 2.0179 - val_accuracy: 0.1900

Epoch 90/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.9975 - accuracy: 0.2142 - val_loss: 2.0609 - val_accuracy: 0.1800

Epoch 91/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.9717 - accuracy: 0.2133 - val_loss: 2.0486 - val_accuracy: 0.1833

Epoch 92/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.9351 - accuracy: 0.2158 - val_loss: 2.0209 - val_accuracy: 0.1833

Epoch 93/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.9267 - accuracy: 0.2183 - val_loss: 2.0260 - val_accuracy: 0.1867

Epoch 94/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.9077 - accuracy: 0.2175 - val_loss: 2.0094 - val_accuracy: 0.1833

Epoch 95/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.9248 - accuracy: 0.2142 - val_loss: 1.9952 - val_accuracy: 0.1833

Epoch 96/300

1200/1200 [==============================] - 0s 91us/sample - loss: 1.9120 - accuracy: 0.2158 - val_loss: 2.0209 - val_accuracy: 0.1833

Epoch 97/300

1200/1200 [==============================] - 0s 88us/sample - loss: 1.9041 - accuracy: 0.2175 - val_loss: 2.0068 - val_accuracy: 0.1800

Epoch 98/300

1200/1200 [==============================] - 0s 99us/sample - loss: 1.9082 - accuracy: 0.2150 - val_loss: 1.9851 - val_accuracy: 0.1833

Epoch 99/300

1200/1200 [==============================] - 0s 102us/sample - loss: 1.9112 - accuracy: 0.2142 - val_loss: 2.0040 - val_accuracy: 0.1833

Epoch 100/300

1200/1200 [==============================] - 0s 97us/sample - loss: 1.9115 - accuracy: 0.2158 - val_loss: 2.0031 - val_accuracy: 0.1833

Epoch 101/300

1200/1200 [==============================] - 0s 89us/sample - loss: 1.9033 - accuracy: 0.2158 - val_loss: 2.0025 - val_accuracy: 0.1833

Epoch 102/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.9050 - accuracy: 0.2167 - val_loss: 1.9681 - val_accuracy: 0.1867

Epoch 103/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.9134 - accuracy: 0.2125 - val_loss: 1.9681 - val_accuracy: 0.1800

Epoch 104/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.9077 - accuracy: 0.2133 - val_loss: 1.9803 - val_accuracy: 0.1767

Epoch 105/300

1200/1200 [==============================] - 0s 94us/sample - loss: 1.9369 - accuracy: 0.2108 - val_loss: 2.0576 - val_accuracy: 0.1900

Epoch 106/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.9377 - accuracy: 0.2117 - val_loss: 1.9591 - val_accuracy: 0.1900

Epoch 107/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8826 - accuracy: 0.2092 - val_loss: 1.9718 - val_accuracy: 0.2000

Epoch 108/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.8820 - accuracy: 0.2200 - val_loss: 1.9902 - val_accuracy: 0.1900

Epoch 109/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8769 - accuracy: 0.2175 - val_loss: 2.0173 - val_accuracy: 0.1933

Epoch 110/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.9608 - accuracy: 0.2017 - val_loss: 1.9729 - val_accuracy: 0.1967

Epoch 111/300

1200/1200 [==============================] - 0s 116us/sample - loss: 1.8808 - accuracy: 0.2125 - val_loss: 1.9769 - val_accuracy: 0.1833

Epoch 112/300

1200/1200 [==============================] - 0s 86us/sample - loss: 1.8805 - accuracy: 0.2150 - val_loss: 1.9790 - val_accuracy: 0.1867

Epoch 113/300

1200/1200 [==============================] - 0s 103us/sample - loss: 1.8834 - accuracy: 0.2175 - val_loss: 1.9581 - val_accuracy: 0.1900

Epoch 114/300

1200/1200 [==============================] - 0s 88us/sample - loss: 1.8809 - accuracy: 0.2175 - val_loss: 1.9681 - val_accuracy: 0.1867

Epoch 115/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.8578 - accuracy: 0.2200 - val_loss: 1.9434 - val_accuracy: 0.1967

Epoch 116/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8657 - accuracy: 0.2192 - val_loss: 1.9675 - val_accuracy: 0.1867

Epoch 117/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.8694 - accuracy: 0.2175 - val_loss: 1.9602 - val_accuracy: 0.1867

Epoch 118/300

1200/1200 [==============================] - 0s 88us/sample - loss: 1.8595 - accuracy: 0.2175 - val_loss: 1.9881 - val_accuracy: 0.1900

Epoch 119/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8951 - accuracy: 0.2150 - val_loss: 2.0302 - val_accuracy: 0.1933

Epoch 120/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8800 - accuracy: 0.2167 - val_loss: 1.9643 - val_accuracy: 0.1900

Epoch 121/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.8553 - accuracy: 0.2183 - val_loss: 1.9638 - val_accuracy: 0.1900

Epoch 122/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8545 - accuracy: 0.2183 - val_loss: 1.9543 - val_accuracy: 0.1900

Epoch 123/300

1200/1200 [==============================] - 0s 127us/sample - loss: 1.8503 - accuracy: 0.2183 - val_loss: 1.9554 - val_accuracy: 0.1933

Epoch 124/300

1200/1200 [==============================] - 0s 111us/sample - loss: 1.8555 - accuracy: 0.2183 - val_loss: 1.9528 - val_accuracy: 0.1933

Epoch 125/300

1200/1200 [==============================] - 0s 96us/sample - loss: 1.8701 - accuracy: 0.2183 - val_loss: 1.9569 - val_accuracy: 0.1933

Epoch 126/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8521 - accuracy: 0.2200 - val_loss: 1.9638 - val_accuracy: 0.1900

Epoch 127/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8495 - accuracy: 0.2200 - val_loss: 1.9522 - val_accuracy: 0.1933

Epoch 128/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8432 - accuracy: 0.2217 - val_loss: 1.9532 - val_accuracy: 0.1933

Epoch 129/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8444 - accuracy: 0.2108 - val_loss: 1.9593 - val_accuracy: 0.1933

Epoch 130/300

1200/1200 [==============================] - 0s 86us/sample - loss: 1.8852 - accuracy: 0.2158 - val_loss: 2.0278 - val_accuracy: 0.1933

Epoch 131/300

1200/1200 [==============================] - 0s 94us/sample - loss: 1.8620 - accuracy: 0.2192 - val_loss: 1.9660 - val_accuracy: 0.1900

Epoch 132/300

1200/1200 [==============================] - 0s 109us/sample - loss: 1.8459 - accuracy: 0.2208 - val_loss: 1.9659 - val_accuracy: 0.1900

Epoch 133/300

1200/1200 [==============================] - 0s 102us/sample - loss: 1.8448 - accuracy: 0.2208 - val_loss: 1.9651 - val_accuracy: 0.1900

Epoch 134/300

1200/1200 [==============================] - 0s 111us/sample - loss: 1.8442 - accuracy: 0.2208 - val_loss: 1.9660 - val_accuracy: 0.1900

Epoch 135/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.8438 - accuracy: 0.2208 - val_loss: 1.9655 - val_accuracy: 0.1900

Epoch 136/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8432 - accuracy: 0.2208 - val_loss: 1.9669 - val_accuracy: 0.1900

Epoch 137/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8430 - accuracy: 0.2208 - val_loss: 1.9678 - val_accuracy: 0.1900

Epoch 138/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.8430 - accuracy: 0.2208 - val_loss: 1.9682 - val_accuracy: 0.1900

Epoch 139/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.8407 - accuracy: 0.2075 - val_loss: 1.9602 - val_accuracy: 0.1900

Epoch 140/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.9064 - accuracy: 0.2175 - val_loss: 1.9759 - val_accuracy: 0.1867

Epoch 141/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8976 - accuracy: 0.2183 - val_loss: 1.9767 - val_accuracy: 0.1833

Epoch 142/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.8879 - accuracy: 0.2183 - val_loss: 1.9758 - val_accuracy: 0.1833

Epoch 143/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8791 - accuracy: 0.2183 - val_loss: 1.9566 - val_accuracy: 0.1933

Epoch 144/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.8941 - accuracy: 0.2167 - val_loss: 2.0898 - val_accuracy: 0.1900

Epoch 145/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8760 - accuracy: 0.2167 - val_loss: 1.9859 - val_accuracy: 0.1833

Epoch 146/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8633 - accuracy: 0.2192 - val_loss: 1.9945 - val_accuracy: 0.1900

Epoch 147/300

1200/1200 [==============================] - 0s 109us/sample - loss: 1.8580 - accuracy: 0.2208 - val_loss: 1.9748 - val_accuracy: 0.1900

Epoch 148/300

1200/1200 [==============================] - 0s 86us/sample - loss: 1.8604 - accuracy: 0.2217 - val_loss: 1.9767 - val_accuracy: 0.1900

Epoch 149/300

1200/1200 [==============================] - 0s 72us/sample - loss: 1.8514 - accuracy: 0.2217 - val_loss: 1.9815 - val_accuracy: 0.1900

Epoch 150/300

1200/1200 [==============================] - 0s 91us/sample - loss: 1.8605 - accuracy: 0.2200 - val_loss: 1.9907 - val_accuracy: 0.1900

Epoch 151/300

1200/1200 [==============================] - 0s 74us/sample - loss: 1.8762 - accuracy: 0.2167 - val_loss: 1.9933 - val_accuracy: 0.1833

Epoch 152/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.8940 - accuracy: 0.2142 - val_loss: 1.9884 - val_accuracy: 0.1800

Epoch 153/300

1200/1200 [==============================] - 0s 74us/sample - loss: 1.8605 - accuracy: 0.2142 - val_loss: 1.9949 - val_accuracy: 0.1800

Epoch 154/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.8479 - accuracy: 0.2125 - val_loss: 1.9862 - val_accuracy: 0.1867

Epoch 155/300

1200/1200 [==============================] - 0s 74us/sample - loss: 1.8471 - accuracy: 0.2217 - val_loss: 1.9922 - val_accuracy: 0.1833

Epoch 156/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8567 - accuracy: 0.2025 - val_loss: 2.0004 - val_accuracy: 0.1833

Epoch 157/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8575 - accuracy: 0.2217 - val_loss: 2.0125 - val_accuracy: 0.1867

Epoch 158/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8412 - accuracy: 0.2242 - val_loss: 1.9838 - val_accuracy: 0.1800

Epoch 159/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8577 - accuracy: 0.2192 - val_loss: 1.9833 - val_accuracy: 0.1800

Epoch 160/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8519 - accuracy: 0.2192 - val_loss: 1.9968 - val_accuracy: 0.1800

Epoch 161/300

1200/1200 [==============================] - 0s 74us/sample - loss: 1.8751 - accuracy: 0.2167 - val_loss: 1.9861 - val_accuracy: 0.1800

Epoch 162/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8681 - accuracy: 0.2183 - val_loss: 1.9815 - val_accuracy: 0.1767

Epoch 163/300

1200/1200 [==============================] - 0s 70us/sample - loss: 1.8543 - accuracy: 0.2208 - val_loss: 1.9673 - val_accuracy: 0.1900

Epoch 164/300

1200/1200 [==============================] - 0s 93us/sample - loss: 1.8358 - accuracy: 0.2225 - val_loss: 1.9659 - val_accuracy: 0.1900

Epoch 165/300

1200/1200 [==============================] - 0s 88us/sample - loss: 1.8482 - accuracy: 0.2225 - val_loss: 2.0217 - val_accuracy: 0.1900

Epoch 166/300

1200/1200 [==============================] - 0s 94us/sample - loss: 1.8488 - accuracy: 0.2217 - val_loss: 2.0187 - val_accuracy: 0.1933

Epoch 167/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8617 - accuracy: 0.2225 - val_loss: 2.0132 - val_accuracy: 0.1933

Epoch 168/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8387 - accuracy: 0.2217 - val_loss: 2.0226 - val_accuracy: 0.1900

Epoch 169/300

1200/1200 [==============================] - 0s 92us/sample - loss: 1.8397 - accuracy: 0.2092 - val_loss: 1.9586 - val_accuracy: 0.1900

Epoch 170/300

1200/1200 [==============================] - 0s 96us/sample - loss: 1.9185 - accuracy: 0.2042 - val_loss: 2.0121 - val_accuracy: 0.1800

Epoch 171/300

1200/1200 [==============================] - 0s 105us/sample - loss: 1.8928 - accuracy: 0.2192 - val_loss: 1.9718 - val_accuracy: 0.1867

Epoch 172/300

1200/1200 [==============================] - 0s 104us/sample - loss: 1.8420 - accuracy: 0.2100 - val_loss: 1.9889 - val_accuracy: 0.1900

Epoch 173/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8351 - accuracy: 0.2225 - val_loss: 1.9906 - val_accuracy: 0.1900

Epoch 174/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8286 - accuracy: 0.2225 - val_loss: 1.9779 - val_accuracy: 0.1933

Epoch 175/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.8306 - accuracy: 0.2225 - val_loss: 1.9559 - val_accuracy: 0.1933

Epoch 176/300

1200/1200 [==============================] - 0s 82us/sample - loss: 1.8241 - accuracy: 0.2225 - val_loss: 1.9723 - val_accuracy: 0.1900

Epoch 177/300

1200/1200 [==============================] - 0s 136us/sample - loss: 1.8254 - accuracy: 0.2217 - val_loss: 2.0305 - val_accuracy: 0.1900

Epoch 178/300

1200/1200 [==============================] - 0s 97us/sample - loss: 1.8396 - accuracy: 0.2217 - val_loss: 1.9988 - val_accuracy: 0.1867

Epoch 179/300

1200/1200 [==============================] - 0s 110us/sample - loss: 1.8355 - accuracy: 0.2100 - val_loss: 1.9613 - val_accuracy: 0.1867

Epoch 180/300

1200/1200 [==============================] - 0s 66us/sample - loss: 1.8311 - accuracy: 0.2117 - val_loss: 1.9697 - val_accuracy: 0.1867

Epoch 181/300

1200/1200 [==============================] - 0s 63us/sample - loss: 1.8299 - accuracy: 0.2225 - val_loss: 1.9758 - val_accuracy: 0.1900

Epoch 182/300

1200/1200 [==============================] - 0s 66us/sample - loss: 1.8364 - accuracy: 0.2208 - val_loss: 1.9917 - val_accuracy: 0.1867

Epoch 183/300

1200/1200 [==============================] - 0s 65us/sample - loss: 1.8319 - accuracy: 0.2225 - val_loss: 2.0343 - val_accuracy: 0.1833

Epoch 184/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8553 - accuracy: 0.2217 - val_loss: 1.9863 - val_accuracy: 0.1867

Epoch 185/300

1200/1200 [==============================] - 0s 87us/sample - loss: 1.8457 - accuracy: 0.2200 - val_loss: 1.9804 - val_accuracy: 0.1833

Epoch 186/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8398 - accuracy: 0.2067 - val_loss: 1.9800 - val_accuracy: 0.1867

Epoch 187/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8420 - accuracy: 0.2100 - val_loss: 1.9673 - val_accuracy: 0.1867

Epoch 188/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8277 - accuracy: 0.2225 - val_loss: 1.9874 - val_accuracy: 0.1867

Epoch 189/300

1200/1200 [==============================] - 0s 72us/sample - loss: 1.8313 - accuracy: 0.2217 - val_loss: 1.9742 - val_accuracy: 0.1867

Epoch 190/300

1200/1200 [==============================] - 0s 72us/sample - loss: 1.8240 - accuracy: 0.2225 - val_loss: 1.9724 - val_accuracy: 0.1900

Epoch 191/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8232 - accuracy: 0.2225 - val_loss: 1.9720 - val_accuracy: 0.1867

Epoch 192/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8226 - accuracy: 0.2225 - val_loss: 1.9721 - val_accuracy: 0.1867

Epoch 193/300

1200/1200 [==============================] - 0s 73us/sample - loss: 1.8224 - accuracy: 0.2142 - val_loss: 1.9728 - val_accuracy: 0.1867

Epoch 194/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.8227 - accuracy: 0.2225 - val_loss: 1.9719 - val_accuracy: 0.1867

Epoch 195/300

1200/1200 [==============================] - 0s 89us/sample - loss: 1.8223 - accuracy: 0.2233 - val_loss: 1.9797 - val_accuracy: 0.1867

Epoch 196/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.8246 - accuracy: 0.2125 - val_loss: 1.9667 - val_accuracy: 0.2000

Epoch 197/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8246 - accuracy: 0.2233 - val_loss: 1.9738 - val_accuracy: 0.1867

Epoch 198/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8242 - accuracy: 0.2225 - val_loss: 1.9749 - val_accuracy: 0.1867

Epoch 199/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8237 - accuracy: 0.2225 - val_loss: 1.9751 - val_accuracy: 0.1867

Epoch 200/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8220 - accuracy: 0.2183 - val_loss: 1.9761 - val_accuracy: 0.2000

Epoch 201/300

1200/1200 [==============================] - 0s 70us/sample - loss: 1.8220 - accuracy: 0.2167 - val_loss: 1.9754 - val_accuracy: 0.1867

Epoch 202/300

1200/1200 [==============================] - 0s 99us/sample - loss: 1.8222 - accuracy: 0.2225 - val_loss: 1.9754 - val_accuracy: 0.1867

Epoch 203/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8219 - accuracy: 0.2133 - val_loss: 1.9748 - val_accuracy: 0.1867

Epoch 204/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.8217 - accuracy: 0.2225 - val_loss: 1.9748 - val_accuracy: 0.1867

Epoch 205/300

1200/1200 [==============================] - 0s 87us/sample - loss: 1.8218 - accuracy: 0.2225 - val_loss: 1.9749 - val_accuracy: 0.1867

Epoch 206/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.8219 - accuracy: 0.2108 - val_loss: 1.9739 - val_accuracy: 0.1867

Epoch 207/300

1200/1200 [==============================] - 0s 82us/sample - loss: 1.8221 - accuracy: 0.2225 - val_loss: 1.9714 - val_accuracy: 0.1900

Epoch 208/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8219 - accuracy: 0.2175 - val_loss: 1.9731 - val_accuracy: 0.2000

Epoch 209/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8206 - accuracy: 0.2158 - val_loss: 2.0009 - val_accuracy: 0.1867

Epoch 210/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8241 - accuracy: 0.2133 - val_loss: 2.0123 - val_accuracy: 0.2033

Epoch 211/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8302 - accuracy: 0.2175 - val_loss: 1.9725 - val_accuracy: 0.1900

Epoch 212/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8232 - accuracy: 0.2208 - val_loss: 1.9676 - val_accuracy: 0.1933

Epoch 213/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8314 - accuracy: 0.2208 - val_loss: 1.9646 - val_accuracy: 0.1833

Epoch 214/300

1200/1200 [==============================] - 0s 99us/sample - loss: 1.8412 - accuracy: 0.2125 - val_loss: 1.9890 - val_accuracy: 0.1900

Epoch 215/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.8199 - accuracy: 0.2225 - val_loss: 1.9928 - val_accuracy: 0.1900

Epoch 216/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.8175 - accuracy: 0.2225 - val_loss: 1.9923 - val_accuracy: 0.1900

Epoch 217/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.8230 - accuracy: 0.2142 - val_loss: 1.9780 - val_accuracy: 0.1900

Epoch 218/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.8272 - accuracy: 0.2225 - val_loss: 1.9601 - val_accuracy: 0.1900

Epoch 219/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8187 - accuracy: 0.2217 - val_loss: 1.9708 - val_accuracy: 0.1867

Epoch 220/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.8191 - accuracy: 0.2150 - val_loss: 1.9720 - val_accuracy: 0.2067

Epoch 221/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.8210 - accuracy: 0.2175 - val_loss: 1.9725 - val_accuracy: 0.1867

Epoch 222/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.8351 - accuracy: 0.2200 - val_loss: 2.0701 - val_accuracy: 0.2067

Epoch 223/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.9326 - accuracy: 0.2208 - val_loss: 2.0633 - val_accuracy: 0.2033

Epoch 224/300

1200/1200 [==============================] - 0s 70us/sample - loss: 1.8287 - accuracy: 0.2217 - val_loss: 1.9644 - val_accuracy: 0.1867

Epoch 225/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.8126 - accuracy: 0.2225 - val_loss: 1.9478 - val_accuracy: 0.1933

Epoch 226/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.7974 - accuracy: 0.2167 - val_loss: 1.9703 - val_accuracy: 0.1933

Epoch 227/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.8058 - accuracy: 0.2233 - val_loss: 1.9449 - val_accuracy: 0.1933

Epoch 228/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.8028 - accuracy: 0.2158 - val_loss: 1.9611 - val_accuracy: 0.1933

Epoch 229/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7992 - accuracy: 0.2233 - val_loss: 1.9618 - val_accuracy: 0.1933

Epoch 230/300

1200/1200 [==============================] - 0s 87us/sample - loss: 1.7986 - accuracy: 0.2242 - val_loss: 1.9631 - val_accuracy: 0.1933

Epoch 231/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.7984 - accuracy: 0.2150 - val_loss: 1.9418 - val_accuracy: 0.2067

Epoch 232/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8031 - accuracy: 0.2183 - val_loss: 1.9612 - val_accuracy: 0.1967

Epoch 233/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.7978 - accuracy: 0.2233 - val_loss: 1.9331 - val_accuracy: 0.1933

Epoch 234/300

1200/1200 [==============================] - 0s 72us/sample - loss: 1.7962 - accuracy: 0.2108 - val_loss: 1.9264 - val_accuracy: 0.1933

Epoch 235/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.8410 - accuracy: 0.2200 - val_loss: 1.9226 - val_accuracy: 0.1933

Epoch 236/300

1200/1200 [==============================] - 0s 86us/sample - loss: 1.7978 - accuracy: 0.2092 - val_loss: 1.9689 - val_accuracy: 0.1933

Epoch 237/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.7963 - accuracy: 0.2233 - val_loss: 1.9378 - val_accuracy: 0.1933

Epoch 238/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.7936 - accuracy: 0.2233 - val_loss: 1.9287 - val_accuracy: 0.2000

Epoch 239/300

1200/1200 [==============================] - 0s 71us/sample - loss: 1.7892 - accuracy: 0.2242 - val_loss: 1.9807 - val_accuracy: 0.1933

Epoch 240/300

1200/1200 [==============================] - 0s 82us/sample - loss: 1.8068 - accuracy: 0.2233 - val_loss: 1.9606 - val_accuracy: 0.1900

Epoch 241/300

1200/1200 [==============================] - 0s 92us/sample - loss: 1.7884 - accuracy: 0.2242 - val_loss: 1.9497 - val_accuracy: 0.1933

Epoch 242/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.7857 - accuracy: 0.2242 - val_loss: 1.9479 - val_accuracy: 0.1933

Epoch 243/300

1200/1200 [==============================] - 0s 96us/sample - loss: 1.7858 - accuracy: 0.2242 - val_loss: 1.9485 - val_accuracy: 0.1933

Epoch 244/300

1200/1200 [==============================] - 0s 91us/sample - loss: 1.7861 - accuracy: 0.2242 - val_loss: 1.9502 - val_accuracy: 0.1933

Epoch 245/300

1200/1200 [==============================] - 0s 97us/sample - loss: 1.7855 - accuracy: 0.2242 - val_loss: 1.9506 - val_accuracy: 0.1933

Epoch 246/300

1200/1200 [==============================] - 0s 116us/sample - loss: 1.7857 - accuracy: 0.2167 - val_loss: 1.9504 - val_accuracy: 0.1933

Epoch 247/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7856 - accuracy: 0.2242 - val_loss: 1.9512 - val_accuracy: 0.1933

Epoch 248/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7857 - accuracy: 0.2242 - val_loss: 1.9511 - val_accuracy: 0.1933

Epoch 249/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.7857 - accuracy: 0.2242 - val_loss: 1.9519 - val_accuracy: 0.1933

Epoch 250/300

1200/1200 [==============================] - 0s 79us/sample - loss: 1.7855 - accuracy: 0.2175 - val_loss: 1.9524 - val_accuracy: 0.2100

Epoch 251/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7856 - accuracy: 0.2083 - val_loss: 1.9506 - val_accuracy: 0.1933

Epoch 252/300

1200/1200 [==============================] - 0s 100us/sample - loss: 1.7854 - accuracy: 0.2242 - val_loss: 1.9521 - val_accuracy: 0.1933

Epoch 253/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.7856 - accuracy: 0.2100 - val_loss: 1.9528 - val_accuracy: 0.1933

Epoch 254/300

1200/1200 [==============================] - 0s 102us/sample - loss: 1.7851 - accuracy: 0.2242 - val_loss: 1.9531 - val_accuracy: 0.1933

Epoch 255/300

1200/1200 [==============================] - 0s 92us/sample - loss: 1.7990 - accuracy: 0.2100 - val_loss: 1.9645 - val_accuracy: 0.2067

Epoch 256/300

1200/1200 [==============================] - 0s 89us/sample - loss: 1.7981 - accuracy: 0.2200 - val_loss: 1.9391 - val_accuracy: 0.2067

Epoch 257/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.8239 - accuracy: 0.2200 - val_loss: 1.9560 - val_accuracy: 0.1867

Epoch 258/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.7985 - accuracy: 0.2225 - val_loss: 1.9522 - val_accuracy: 0.1967

Epoch 259/300

1200/1200 [==============================] - 0s 132us/sample - loss: 1.7975 - accuracy: 0.2083 - val_loss: 1.9604 - val_accuracy: 0.1900

Epoch 260/300

1200/1200 [==============================] - 0s 100us/sample - loss: 1.8004 - accuracy: 0.2150 - val_loss: 1.9494 - val_accuracy: 0.1900

Epoch 261/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.7985 - accuracy: 0.2225 - val_loss: 1.9628 - val_accuracy: 0.1933

Epoch 262/300

1200/1200 [==============================] - 0s 84us/sample - loss: 1.8268 - accuracy: 0.2217 - val_loss: 1.9613 - val_accuracy: 0.1867

Epoch 263/300

1200/1200 [==============================] - 0s 77us/sample - loss: 1.8105 - accuracy: 0.2142 - val_loss: 1.9457 - val_accuracy: 0.2067

Epoch 264/300

1200/1200 [==============================] - 0s 72us/sample - loss: 1.8163 - accuracy: 0.2150 - val_loss: 1.9445 - val_accuracy: 0.1933

Epoch 265/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.8061 - accuracy: 0.2217 - val_loss: 1.9320 - val_accuracy: 0.1967

Epoch 266/300

1200/1200 [==============================] - 0s 82us/sample - loss: 1.8033 - accuracy: 0.2217 - val_loss: 1.9521 - val_accuracy: 0.1933

Epoch 267/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.8047 - accuracy: 0.2075 - val_loss: 1.9632 - val_accuracy: 0.1933

Epoch 268/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.8015 - accuracy: 0.2142 - val_loss: 1.9484 - val_accuracy: 0.1933

Epoch 269/300

1200/1200 [==============================] - 0s 82us/sample - loss: 1.7872 - accuracy: 0.2242 - val_loss: 1.9366 - val_accuracy: 0.1933

Epoch 270/300

1200/1200 [==============================] - 0s 94us/sample - loss: 1.7893 - accuracy: 0.2233 - val_loss: 1.9600 - val_accuracy: 0.1900

Epoch 271/300

1200/1200 [==============================] - 0s 90us/sample - loss: 1.7906 - accuracy: 0.2233 - val_loss: 1.9821 - val_accuracy: 0.1867

Epoch 272/300

1200/1200 [==============================] - 0s 105us/sample - loss: 1.8062 - accuracy: 0.2225 - val_loss: 1.9810 - val_accuracy: 0.1867

Epoch 273/300

1200/1200 [==============================] - 0s 114us/sample - loss: 1.7946 - accuracy: 0.2150 - val_loss: 1.9593 - val_accuracy: 0.2033

Epoch 274/300

1200/1200 [==============================] - 0s 124us/sample - loss: 1.7894 - accuracy: 0.2233 - val_loss: 1.9460 - val_accuracy: 0.1900

Epoch 275/300

1200/1200 [==============================] - 0s 105us/sample - loss: 1.8107 - accuracy: 0.2233 - val_loss: 1.9457 - val_accuracy: 0.1967

Epoch 276/300

1200/1200 [==============================] - 0s 87us/sample - loss: 1.7970 - accuracy: 0.2075 - val_loss: 1.9547 - val_accuracy: 0.1933

Epoch 277/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.7941 - accuracy: 0.2150 - val_loss: 1.9811 - val_accuracy: 0.2000

Epoch 278/300

1200/1200 [==============================] - 0s 87us/sample - loss: 1.7874 - accuracy: 0.2217 - val_loss: 1.9615 - val_accuracy: 0.1900

Epoch 279/300

1200/1200 [==============================] - 0s 102us/sample - loss: 1.7846 - accuracy: 0.2092 - val_loss: 1.9728 - val_accuracy: 0.1900

Epoch 280/300

1200/1200 [==============================] - 0s 98us/sample - loss: 1.7919 - accuracy: 0.2250 - val_loss: 1.9762 - val_accuracy: 0.1867

Epoch 281/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.7869 - accuracy: 0.2242 - val_loss: 1.9412 - val_accuracy: 0.1900

Epoch 282/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7851 - accuracy: 0.2133 - val_loss: 1.9423 - val_accuracy: 0.1967

Epoch 283/300

1200/1200 [==============================] - 0s 105us/sample - loss: 1.7862 - accuracy: 0.2242 - val_loss: 1.9481 - val_accuracy: 0.1900

Epoch 284/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7846 - accuracy: 0.2242 - val_loss: 1.9711 - val_accuracy: 0.1900

Epoch 285/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.7848 - accuracy: 0.2242 - val_loss: 1.9689 - val_accuracy: 0.1900

Epoch 286/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.7858 - accuracy: 0.2242 - val_loss: 1.9693 - val_accuracy: 0.1900

Epoch 287/300

1200/1200 [==============================] - 0s 80us/sample - loss: 1.7857 - accuracy: 0.2242 - val_loss: 1.9684 - val_accuracy: 0.1900

Epoch 288/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.7858 - accuracy: 0.2108 - val_loss: 1.9677 - val_accuracy: 0.2067

Epoch 289/300

1200/1200 [==============================] - 0s 85us/sample - loss: 1.7857 - accuracy: 0.2258 - val_loss: 1.9702 - val_accuracy: 0.1900

Epoch 290/300

1200/1200 [==============================] - 0s 95us/sample - loss: 1.7857 - accuracy: 0.2242 - val_loss: 1.9766 - val_accuracy: 0.1900

Epoch 291/300

1200/1200 [==============================] - 0s 88us/sample - loss: 1.7846 - accuracy: 0.2092 - val_loss: 1.9757 - val_accuracy: 0.1900

Epoch 292/300

1200/1200 [==============================] - 0s 115us/sample - loss: 1.7847 - accuracy: 0.2117 - val_loss: 1.9762 - val_accuracy: 0.1900

Epoch 293/300

1200/1200 [==============================] - 0s 81us/sample - loss: 1.7843 - accuracy: 0.2075 - val_loss: 1.9763 - val_accuracy: 0.1900

Epoch 294/300

1200/1200 [==============================] - 0s 70us/sample - loss: 1.7847 - accuracy: 0.2242 - val_loss: 1.9765 - val_accuracy: 0.1900

Epoch 295/300

1200/1200 [==============================] - 0s 78us/sample - loss: 1.7842 - accuracy: 0.2242 - val_loss: 1.9724 - val_accuracy: 0.1900

Epoch 296/300

1200/1200 [==============================] - 0s 75us/sample - loss: 1.7827 - accuracy: 0.2242 - val_loss: 1.9740 - val_accuracy: 0.1900

Epoch 297/300

1200/1200 [==============================] - 0s 86us/sample - loss: 1.7841 - accuracy: 0.2125 - val_loss: 1.9607 - val_accuracy: 0.1900

Epoch 298/300

1200/1200 [==============================] - 0s 83us/sample - loss: 1.7826 - accuracy: 0.2242 - val_loss: 1.9601 - val_accuracy: 0.1900

Epoch 299/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.7823 - accuracy: 0.2242 - val_loss: 1.9614 - val_accuracy: 0.1900

Epoch 300/300

1200/1200 [==============================] - 0s 76us/sample - loss: 1.7827 - accuracy: 0.2242 - val_loss: 1.9612 - val_accuracy: 0.1900

<tensorflow.python.keras.callbacks.History at 0x7fb4259a4550>

Experiment observations on TensorBoard#

What distribution of the weights do you observe as you move from the output layer to the input layer in each experiment?

Look in TensorBoard at the distributions or histograms charts named Layer_00_Input/kernel_0, Layer_01_Hidden/kernel_0, etc. for the different layers. These charts show weights rather than gradients, but a layer whose weight distribution barely moves away from its initial state during training is receiving small gradients. You should see that:

- Gradients are usually larger at the output layer and tend to decrease as you move backwards through the network.
- With sigmoid activations, gradients are always small and decay rapidly from the output layer all the way to the input layer.
- ReLU may still show some vanishing gradient, because its derivative is zero for units whose pre-activation is negative.
- Leaky ReLU would probably keep gradients roughly constant across layers.
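The same trend can be reproduced without TensorBoard. The following is a minimal NumPy sketch, not the notebook's own experiment: it builds a randomly initialized 8-layer MLP (width 64, He-style normal initialization shared by all activations, no biases, a linear output unit and an MSE loss on random targets; all of these are assumptions chosen for illustration), backpropagates by hand and reports the mean absolute weight gradient of each layer, input layer first. The function name layer_gradient_norms and the Leaky ReLU slope of 0.01 are hypothetical choices.

```python
import numpy as np

def sigm(z):
    return 1 / (1 + np.exp(-z))

# (activation, derivative) pairs; the Leaky ReLU slope 0.01 is an assumption
ACTIVATIONS = {
    "sigmoid": (sigm, lambda z: sigm(z) * (1 - sigm(z))),
    "relu": (lambda z: np.maximum(z, 0), lambda z: (z > 0).astype(float)),
    "leaky_relu": (lambda z: np.where(z > 0, z, 0.01 * z),
                   lambda z: np.where(z > 0, 1.0, 0.01)),
}

def layer_gradient_norms(act_name, n_layers=8, width=64, n_samples=256, seed=0):
    """Mean |dL/dW_l| for each hidden layer of a random MLP, input layer first."""
    rng = np.random.default_rng(seed)
    f, df = ACTIVATIONS[act_name]
    X = rng.normal(size=(n_samples, width))
    y = rng.normal(size=(n_samples, 1))
    # He-style initialization, identical for every activation so only f changes
    Ws = [rng.normal(scale=np.sqrt(2 / width), size=(width, width)) for _ in range(n_layers)]
    W_out = rng.normal(scale=np.sqrt(2 / width), size=(width, 1))

    # forward pass: keep each layer's input a and pre-activation z
    a, inputs, zs = X, [], []
    for W in Ws:
        z = a @ W
        inputs.append(a)
        zs.append(z)
        a = f(z)
    y_hat = a @ W_out  # linear output layer

    # backward pass for L = mean((y_hat - y)**2)
    delta = 2 * (y_hat - y) / n_samples   # dL/dy_hat
    delta = delta @ W_out.T               # dL/da of the last hidden layer
    norms = []
    for W, a_in, z in zip(reversed(Ws), reversed(inputs), reversed(zs)):
        delta = delta * df(z)                         # dL/dz = dL/da * f'(z)
        norms.append(np.abs(a_in.T @ delta).mean())   # mean |dL/dW_l|
        delta = delta @ W.T                           # dL/da of the previous layer
    return norms[::-1]

for name in ACTIVATIONS:
    g = layer_gradient_norms(name)
    print(f"{name:>10}: " + " ".join(f"{v:.1e}" for v in g))
```

Running it should show the sigmoid gradients shrinking by orders of magnitude towards the input layer, while the ReLU and Leaky ReLU values stay much closer to each other across layers; the exact numbers depend on the seed and on the assumed initialization.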

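Recall that, in the backpropagation algorithm, the gradient of the loss function \(L\) with respect to the weights \(W_l\) of a given layer \(l\) is proportional to the activation derivatives and the weights of the layers between \(l\) and the output (the layers visited earlier in the backward pass). Treating each factor as a scalar for simplicity:

\[
\frac{\partial L}{\partial W_l} \propto f'\big(z^{(l)}\big) \prod_{k=l+1}^{N} W_k \, f'\big(z^{(k)}\big)
\]

where \(f'\) is the derivative of the activation function, \(z^{(l)}\) is the pre-activation (weighted input) of layer \(l\), and \(N\) is the index of the last layer.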
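The derivative factors \(f'\) are what separate the activation functions. The sigmoid derivative never exceeds 0.25 (reached at \(z=0\)), while the tanh derivative peaks at 1. The short sketch below, which ignores the weight factors \(W_k\) (equivalently, assumes they have magnitude around 1), computes the largest possible product of derivative factors for a few depths. For ten sigmoid layers this bound is \(0.25^{10} \approx 9.5 \times 10^{-7}\), so the gradient reaching the first layers is tiny even in the best case. The functions are redefined here so the block runs on its own.

```python
import numpy as np

# Derivatives of sigmoid and tanh, as defined earlier in the notebook
sigm = lambda z: 1 / (1 + np.exp(-z))
dsigm = lambda z: sigm(z) * (1 - sigm(z))
dtanh = lambda z: 1 - np.tanh(z) ** 2

z = np.linspace(-10, 10, 10001)   # the grid includes z = 0, where both derivatives peak
max_dsigm = dsigm(z).max()        # peak value 0.25
max_dtanh = dtanh(z).max()        # peak value 1

# Upper bound on the product of derivative factors after `depth` layers,
# ignoring the weight factors W_k
for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  sigmoid <= {max_dsigm ** depth:.1e}  "
          f"tanh <= {max_dtanh ** depth:.1e}")
```

Note that tanh only avoids the decay while its units stay near \(z=0\); saturated units (large \(|z|\)) have derivatives close to zero, as the tanh plot earlier in the notebook shows.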

Do you think that, in the long run, the sigmoid network would reach accuracy levels comparable to ReLU or Leaky ReLU? At what computational cost?

%load_ext tensorboard

%tensorboard --logdir log
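The log directory read by TensorBoard is written during training by a Keras TensorBoard callback with histogram logging enabled. The sketch below shows one way such experiments could be set up; the helper names build_model and run_experiment, the network shape, the Layer_XX_Output name, the optimizer and the one-subdirectory-per-activation layout are assumptions, not the notebook's original code. The exact histogram tag names (for instance whether kernels appear as kernel_0) can differ between TensorFlow/Keras versions.

```python
import numpy as np
import tensorflow as tf

def build_model(activation, n_inputs, n_classes, n_hidden=5, width=20):
    """Hypothetical deep MLP whose layer names follow the Layer_XX_... pattern."""
    layers = [tf.keras.Input(shape=(n_inputs,)),
              tf.keras.layers.Dense(width, activation=activation, name="Layer_00_Input")]
    for i in range(1, n_hidden + 1):
        layers.append(tf.keras.layers.Dense(width, activation=activation,
                                            name=f"Layer_{i:02d}_Hidden"))
    layers.append(tf.keras.layers.Dense(n_classes, activation="softmax",
                                        name=f"Layer_{n_hidden + 1:02d}_Output"))
    model = tf.keras.Sequential(layers)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model

def run_experiment(name, activation, X, y, epochs, logdir="log"):
    """Train one model and log weight histograms to logdir/name."""
    model = build_model(activation, X.shape[1], int(y.max()) + 1)
    # histogram_freq=1 writes weight histograms after every epoch
    tb = tf.keras.callbacks.TensorBoard(log_dir=f"{logdir}/{name}", histogram_freq=1)
    return model.fit(X, y, epochs=epochs, validation_split=0.2,
                     callbacks=[tb], verbose=0)
```

For example, run_experiment("sigmoid", "sigmoid", X, y, epochs=300) and run_experiment("relu", "relu", X, y, epochs=300) would write two runs that TensorBoard shows side by side; for Leaky ReLU a callable such as tf.nn.leaky_relu can be passed as the activation.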