5.5 Sequences generation#

!wget -nc --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/2021.deeplearning/main/content/init.py

import init; init.init(force_download=False);

replicating local resources

Sampling#

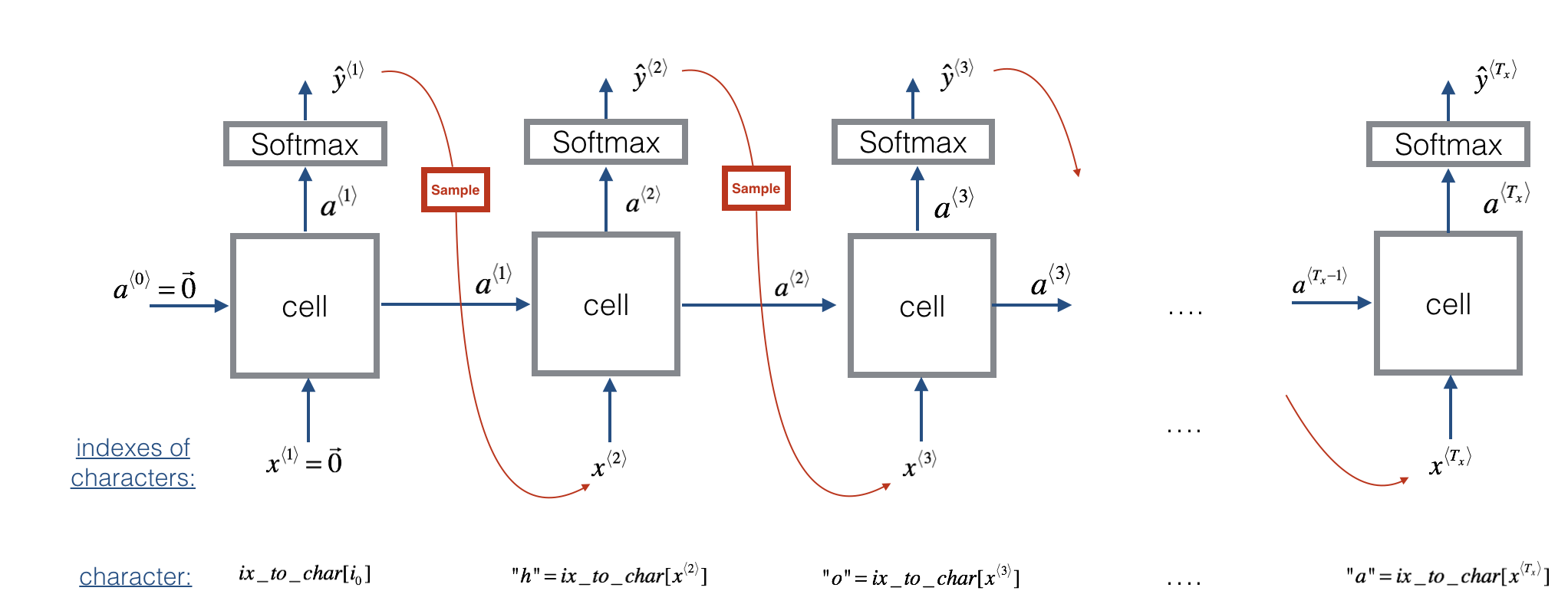

A recurrent neural network can be used as a generative model once it has been trained. This is now common practice, not only to study how well a model has learned a problem but also to learn more about the problem domain itself. For example, this approach is used for music generation and composition.

The process of generation is explained in the picture below:

from IPython.display import Image

Image(filename='local/imgs/dinos3.png', width=800)

#<img src="local/imgs/dinos3.png" style="width:500;height:300px;">
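The generation loop described above can be sketched in plain Python before touching any real model. This is only a minimal sketch: `toy_next_char_probs` is a hypothetical stand-in for a trained network, and the three-character alphabet and its probabilities are assumptions chosen for illustration.

```python
import random

ALPHABET = "ab "  # assumed toy vocabulary


def toy_next_char_probs(window):
    """Hypothetical stand-in for a trained RNN: P(next char | window)."""
    last = window[-1]
    if last == "a":
        return {"a": 0.1, "b": 0.8, " ": 0.1}
    if last == "b":
        return {"a": 0.2, "b": 0.1, " ": 0.7}
    return {"a": 0.9, "b": 0.05, " ": 0.05}


def generate(seed, n_steps, rng):
    """Sample one character at a time, feeding each sample back as input."""
    window = list(seed)
    generated = []
    for _ in range(n_steps):
        probs = toy_next_char_probs(window)
        chars = list(probs)
        weights = [probs[c] for c in chars]
        next_char = rng.choices(chars, weights=weights, k=1)[0]
        generated.append(next_char)
        # slide the window: drop the oldest character, append the new one
        window = window[1:] + [next_char]
    return "".join(generated)


text = generate("ab ", n_steps=20, rng=random.Random(0))
print(text)
```

The window always keeps the same length, which is what lets a model trained on fixed-length sequences be reused at every generation step.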

Let’s do an example:

import sys

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Masking, Dropout, LSTM, Input

from tensorflow.keras.optimizers import RMSprop

from tensorflow.keras.callbacks import ModelCheckpoint

import keras.utils as np_utils

import nltk

nltk.download('gutenberg')

[nltk_data] Downloading package gutenberg to /root/nltk_data...

[nltk_data] Unzipping corpora/gutenberg.zip.

True

# load the ASCII text (case is preserved, so the vocabulary keeps uppercase letters)

raw_text = nltk.corpus.gutenberg.raw('bible-kjv.txt')

raw_text[100:1000]

'Genesis\n\n\n1:1 In the beginning God created the heaven and the earth.\n\n1:2 And the earth was without form, and void; and darkness was upon\nthe face of the deep. And the Spirit of God moved upon the face of the\nwaters.\n\n1:3 And God said, Let there be light: and there was light.\n\n1:4 And God saw the light, that it was good: and God divided the light\nfrom the darkness.\n\n1:5 And God called the light Day, and the darkness he called Night.\nAnd the evening and the morning were the first day.\n\n1:6 And God said, Let there be a firmament in the midst of the waters,\nand let it divide the waters from the waters.\n\n1:7 And God made the firmament, and divided the waters which were\nunder the firmament from the waters which were above the firmament:\nand it was so.\n\n1:8 And God called the firmament Heaven. And the evening and the\nmorning were the second day.\n\n1:9 And God said, Let the waters under the heav'

# create mapping of unique chars to integers

chars = sorted(list(set(raw_text)))

char_to_int = dict((c, i) for i, c in enumerate(chars))

char_to_int

{'\n': 0,

' ': 1,

'!': 2,

"'": 3,

'(': 4,

')': 5,

',': 6,

'-': 7,

'.': 8,

'0': 9,

'1': 10,

'2': 11,

'3': 12,

'4': 13,

'5': 14,

'6': 15,

'7': 16,

'8': 17,

'9': 18,

':': 19,

';': 20,

'?': 21,

'A': 22,

'B': 23,

'C': 24,

'D': 25,

'E': 26,

'F': 27,

'G': 28,

'H': 29,

'I': 30,

'J': 31,

'K': 32,

'L': 33,

'M': 34,

'N': 35,

'O': 36,

'P': 37,

'Q': 38,

'R': 39,

'S': 40,

'T': 41,

'U': 42,

'V': 43,

'W': 44,

'Y': 45,

'Z': 46,

'[': 47,

']': 48,

'a': 49,

'b': 50,

'c': 51,

'd': 52,

'e': 53,

'f': 54,

'g': 55,

'h': 56,

'i': 57,

'j': 58,

'k': 59,

'l': 60,

'm': 61,

'n': 62,

'o': 63,

'p': 64,

'q': 65,

'r': 66,

's': 67,

't': 68,

'u': 69,

'v': 70,

'w': 71,

'x': 72,

'y': 73,

'z': 74}
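To make the mapping concrete, the following sketch builds the same kind of dictionary on a short toy string (an assumption, not the corpus) and checks that encoding followed by decoding returns the original text. Sorting by code point explains the order seen above: whitespace and punctuation first, then digits, uppercase letters and lowercase letters.

```python
text = "In the beginning"  # toy text, not the full corpus

# same construction as above, applied to the toy text
chars = sorted(set(text))
char_to_int = {c: i for i, c in enumerate(chars)}
int_to_char = {i: c for i, c in enumerate(chars)}

encoded = [char_to_int[c] for c in text]
decoded = "".join(int_to_char[i] for i in encoded)

print(encoded)
print(decoded)
```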

n_chars = len(raw_text)

n_vocab = len(chars)

print("Total Characters: ", n_chars)

print("Total Vocab: ", n_vocab)

Total Characters: 4332554

Total Vocab: 75

To train the model we are going to use sequences of 60 characters. Because the data set is too large, we are going to use only the first 200000 characters, taking one sequence every 3 characters.

# prepare the dataset of input to output pairs encoded as integers

seq_length = 60

dataX = []

dataY = []

n_chars = 200000

for i in range(0, n_chars - seq_length, 3):

    seq_in = raw_text[i:i + seq_length]

    seq_out = raw_text[i + seq_length]

    dataX.append([char_to_int[char] for char in seq_in])

    dataY.append(char_to_int[seq_out])

n_patterns = len(dataX)

print("Total Patterns: ", n_patterns)

Total Patterns: 66647
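The pattern count follows directly from the window length and the stride. The sketch below reproduces it without touching the text, using the same three values as the loop above, and compares it with the closed form ceil((n_chars - seq_length) / step).

```python
import math

n_chars = 200000   # characters kept from the corpus
seq_length = 60    # input window length
step = 3           # stride between consecutive windows

# start positions visited by the loop above
starts = range(0, n_chars - seq_length, step)
n_patterns = len(starts)

# closed form for the same count
closed_form = math.ceil((n_chars - seq_length) / step)

print(n_patterns, closed_form)
```

Each start position leaves room for one target character after the window, which is why the range stops at `n_chars - seq_length`.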

# reshape X to be [samples, time steps, features]

X = np.reshape(dataX, (n_patterns, seq_length, 1))

# normalize

X = X / float(n_vocab)

# one hot encode the output variable

y = np_utils.to_categorical(dataY)

int_to_char = dict((i, c) for i, c in enumerate(chars))
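One detail worth keeping in mind: when `to_categorical` is called without `num_classes`, the width of the one-hot vectors is inferred from the largest label present, so it matches `n_vocab` only if the highest-indexed character occurs among the targets. The sketch below illustrates this with a plain-Python stand-in; `one_hot` is a hypothetical helper written for this example, not the Keras function, and the labels are assumed toy values.

```python
def one_hot(labels, num_classes=None):
    """One-hot encode integer labels; width defaults to max(labels) + 1."""
    if num_classes is None:
        num_classes = max(labels) + 1
    return [[1.0 if j == label else 0.0 for j in range(num_classes)]
            for label in labels]


n_vocab = 75
labels = [0, 49, 53]  # toy targets that never reach index 74

inferred = one_hot(labels)                       # width taken from the data
explicit = one_hot(labels, num_classes=n_vocab)  # width tied to the vocabulary

print(len(inferred[0]), len(explicit[0]))
```

Passing the vocabulary size explicitly, as the batch generator later in this section does, keeps the output layer size independent of which characters happen to appear in the subset.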

model = Sequential()

model.add(Input(shape=(X.shape[1], X.shape[2])))

model.add(LSTM(256))

model.add(Dense(y.shape[1], activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam')

X.shape

(66647, 60, 1)

Note that the entire dataset is used for training; no validation split is held out.

model.fit(X, y, epochs=200, batch_size=128, verbose=1)

Epoch 1/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 7s 9ms/step - loss: 3.2394

Epoch 2/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.8380

Epoch 3/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.6651

Epoch 4/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.5972

Epoch 5/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.5402

Epoch 6/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.5043

Epoch 7/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.4655

Epoch 8/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.4288

Epoch 9/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.4015

Epoch 10/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.3626

Epoch 11/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.3428

Epoch 12/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 2.3193

Epoch 13/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.2862

Epoch 14/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 2.2651

Epoch 15/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.2432

Epoch 16/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.2204

Epoch 17/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 2.1990

Epoch 18/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.1699

Epoch 19/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.1396

Epoch 20/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.1321

Epoch 21/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.1024

Epoch 22/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.0806

Epoch 23/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.0819

Epoch 24/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.0450

Epoch 25/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.0350

Epoch 26/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 2.0037

Epoch 27/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.9811

Epoch 28/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.9615

Epoch 29/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.9455

Epoch 30/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.9273

Epoch 31/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.8968

Epoch 32/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.8813

Epoch 33/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.8621

Epoch 34/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.8444

Epoch 35/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.8197

Epoch 36/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.8057

Epoch 37/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.7844

Epoch 38/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.7618

Epoch 39/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.7459

Epoch 40/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.7293

Epoch 41/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.6968

Epoch 42/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.6714

Epoch 43/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.6687

Epoch 44/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.6505

Epoch 45/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.6269

Epoch 46/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.6167

Epoch 47/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.5986

Epoch 48/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.5741

Epoch 49/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.5755

Epoch 50/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.5455

Epoch 51/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.5258

Epoch 52/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.5070

Epoch 53/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.4997

Epoch 54/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.4799

Epoch 55/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.4692

Epoch 56/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.4617

Epoch 57/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.4405

Epoch 58/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.4272

Epoch 59/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.4093

Epoch 60/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3963

Epoch 61/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3829

Epoch 62/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3615

Epoch 63/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3552

Epoch 64/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3416

Epoch 65/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3192

Epoch 66/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.3203

Epoch 67/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2948

Epoch 68/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.2755

Epoch 69/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2827

Epoch 70/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2739

Epoch 71/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2782

Epoch 72/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2668

Epoch 73/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2423

Epoch 74/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2238

Epoch 75/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.2224

Epoch 76/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.1871

Epoch 77/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1871

Epoch 78/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1770

Epoch 79/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1803

Epoch 80/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.1528

Epoch 81/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1450

Epoch 82/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.1419

Epoch 83/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1529

Epoch 84/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1328

Epoch 85/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1066

Epoch 86/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.1042

Epoch 87/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0991

Epoch 88/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0804

Epoch 89/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0868

Epoch 90/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0557

Epoch 91/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0593

Epoch 92/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.0710

Epoch 93/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0527

Epoch 94/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0280

Epoch 95/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0371

Epoch 96/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0681

Epoch 97/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 1.0289

Epoch 98/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9916

Epoch 99/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0164

Epoch 100/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 1.0012

Epoch 101/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9892

Epoch 102/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9922

Epoch 103/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9619

Epoch 104/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9699

Epoch 105/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9584

Epoch 106/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9709

Epoch 107/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9623

Epoch 108/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9773

Epoch 109/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9338

Epoch 110/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9301

Epoch 111/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9390

Epoch 112/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9261

Epoch 113/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9335

Epoch 114/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9233

Epoch 115/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9209

Epoch 116/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8926

Epoch 117/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9061

Epoch 118/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.9191

Epoch 119/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8766

Epoch 120/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8668

Epoch 121/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8790

Epoch 122/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8689

Epoch 123/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8483

Epoch 124/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8740

Epoch 125/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8635

Epoch 126/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.9182

Epoch 127/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8355

Epoch 128/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8259

Epoch 129/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8357

Epoch 130/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8493

Epoch 131/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8192

Epoch 132/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8251

Epoch 133/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8240

Epoch 134/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8274

Epoch 135/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8150

Epoch 136/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.8270

Epoch 137/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8199

Epoch 138/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7735

Epoch 139/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7903

Epoch 140/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7771

Epoch 141/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8136

Epoch 142/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7894

Epoch 143/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7768

Epoch 144/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.8003

Epoch 145/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7731

Epoch 146/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7675

Epoch 147/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7548

Epoch 148/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7397

Epoch 149/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7591

Epoch 150/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7482

Epoch 151/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7378

Epoch 152/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7479

Epoch 153/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7272

Epoch 154/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7635

Epoch 155/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7294

Epoch 156/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7394

Epoch 157/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7294

Epoch 158/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7369

Epoch 159/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7095

Epoch 160/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7222

Epoch 161/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6835

Epoch 162/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7216

Epoch 163/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6801

Epoch 164/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6978

Epoch 165/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6934

Epoch 166/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6849

Epoch 167/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6904

Epoch 168/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7037

Epoch 169/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6854

Epoch 170/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6622

Epoch 171/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7095

Epoch 172/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6643

Epoch 173/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6493

Epoch 174/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7029

Epoch 175/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6822

Epoch 176/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6608

Epoch 177/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7001

Epoch 178/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6585

Epoch 179/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6293

Epoch 180/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 10s 10ms/step - loss: 0.6874

Epoch 181/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7237

Epoch 182/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6297

Epoch 183/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6462

Epoch 184/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6545

Epoch 185/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6101

Epoch 186/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6184

Epoch 187/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6304

Epoch 188/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.7979

Epoch 189/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6113

Epoch 190/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6050

Epoch 191/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6132

Epoch 192/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.7227

Epoch 193/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6218

Epoch 194/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6143

Epoch 195/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.5991

Epoch 196/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6099

Epoch 197/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6203

Epoch 198/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.6039

Epoch 199/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 9ms/step - loss: 0.5957

Epoch 200/200

521/521 ━━━━━━━━━━━━━━━━━━━━ 5s 10ms/step - loss: 0.6121

<keras.src.callbacks.history.History at 0x790125ece0f0>
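To put the loss values in the log above in context, they can be compared with the loss of a model that guesses uniformly over the vocabulary. The sketch assumes the reported loss is the mean categorical cross-entropy measured in nats (natural logarithm); the final loss value is copied from the last epoch of the log.

```python
import math

n_vocab = 75
final_loss = 0.6121  # last value in the log above

# cross-entropy of a model that assigns 1/n_vocab to every character
uniform_loss = math.log(n_vocab)

# perplexity: effective number of equally likely choices per character
perplexity = math.exp(final_loss)

print(round(uniform_loss, 3), round(perplexity, 2))
```

Because this is a training loss with no held-out data, a low value may partly reflect memorization of the 200000-character subset rather than a better model of the language.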

int_to_char = dict((i, c) for i, c in enumerate(chars))

# pick a random seed

start = np.random.randint(0, len(dataX)-1)

pattern = list(dataX[start])  # copy, so the append below does not modify dataX

print("Seed:")

print("\"", ''.join([int_to_char[value] for value in pattern]), "\"")

# generate characters

for i in range(1000):

    x = np.reshape(pattern, (1, len(pattern), 1))

    x = x / float(n_vocab)

    prediction = model.predict(x, verbose=0)

    index = np.argmax(prediction)

    result = int_to_char[index]

    seq_in = [int_to_char[value] for value in pattern]

    sys.stdout.write(result)

    pattern.append(index)

    pattern = pattern[1:len(pattern)]

print("\nDone.")

Seed:

" nd it was told Laban on the third day that Jacob was fled.

"

44:2 And Jbcob gassrr ir the fiid of Edyaa whe wondo sfnha and ailrh yuaer,of his,sane. thyung, Whs ih boit, and,hn hl hnrde thie S miv seke thi tho cuonh.

4:: 9 di Nbcrllh hsn the creedrgn Hadhb'o sonsins in the eayte; 19:52 Aod he lan toto mhe sevt sherg oh she cattht, and whrt yhll Ioteph woy wate ueie io thi grrst, thtd gn

ttes thoe

ciree mh

33:24 And Ibcab shad unto Jaca, theee wsro them T ar the GORD ho mlcd Pia andu ho m

jnos thtu moattdnt thi wiol

fe thv whec. and whru bivse clsm mo mof vhath, and bevsedn ho tht ferd: and I cas dr fi wh,t yill

w haneh in teers. and whsu bilsn f fiost and coddd thto mis soon thet

yhenh ho cntnre thi eeaem oi

hif seren sekh?

2911 Ant see snea and sald

Ir marh dr the tooeg oh mha ywon ho thi land of his sone.

anr

whe flpane waa fedbn ie

the LORD with the sems of Nicn.o gonde. and snuo thed, and tayt mu butthe thte mo anc fs fo thel miyeeted

and thes cil that here tpe fay

31: A Ni Eva efter is thr glihm, and poltipd aro thee, and pe sea

Done.

The result is not what we expected, mainly for three reasons:#

The model needs to be trained on a larger dataset to better capture the dynamics of the language.

At generation time it is not advisable to always select the output with the maximum probability. Instead, the output distribution should be used as the parameters of a multinomial distribution from which the next character is sampled. This keeps the model from getting stuck in a loop.

A more flexible model, trained with more data, could achieve better results.

def sample(preds, temperature=1.0):

    # helper function to sample an index from a probability array

    preds = np.asarray(preds).astype('float64')

    preds = np.log(preds) / temperature

    exp_preds = np.exp(preds)

    preds = exp_preds / np.sum(exp_preds)

    probas = np.random.multinomial(1, preds, 1)

    return np.argmax(probas)
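The effect of the `temperature` argument is easier to see on a small distribution. The sketch below applies the same rescaling as `sample` (log, divide by the temperature, exponentiate, renormalize) using only the standard library; `apply_temperature` is a hypothetical helper and the three probabilities are assumed toy values.

```python
import math


def apply_temperature(probs, temperature):
    """Rescale a distribution as in `sample`: softmax(log(p) / T)."""
    scaled = [math.exp(math.log(p) / temperature) for p in probs]
    total = sum(scaled)
    return [s / total for s in scaled]


probs = [0.6, 0.3, 0.1]  # assumed toy softmax output

for t in (0.3, 1.0, 2.0):
    print(t, [round(p, 3) for p in apply_temperature(probs, t)])
```

Temperatures below 1 sharpen the distribution toward the most likely character (close to argmax), a temperature of 1 leaves it unchanged, and temperatures above 1 flatten it and make the samples more varied.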

Using a more complex model with the whole dataset#

This problem is computationally demanding, so the next model was trained on a GPU.

batch_size = 128

model = Sequential()

model.add(Input(shape=(X.shape[1], X.shape[2])))

model.add(LSTM(256, return_sequences=True))

model.add(Dropout(rate=0.2))

model.add(LSTM(256))

model.add(Dropout(rate=0.2))

model.add(Dense(y.shape[1], activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam')

Loading more data at once could cause memory errors, so we create a batch generator class that builds the training batches on the fly:

from tensorflow.keras.utils import to_categorical

class KerasBatchGenerator(object):

    def __init__(self, data, num_steps, batch_size, char_to_int, n_vocab, skip_step=1):

        self.data = data

        self.num_steps = num_steps

        self.batch_size = batch_size

        self.char_to_int = char_to_int

        self.n_vocab = n_vocab

        self.current_idx = 0

        self.skip_step = skip_step

    def generate(self):

        while True:

            x = np.zeros((self.batch_size, self.num_steps, 1), dtype=np.float32)

            y = np.zeros((self.batch_size, self.n_vocab), dtype=np.float32)

            for i in range(self.batch_size):

                if self.current_idx + self.num_steps >= len(self.data):

                    # reset the index back to the start of the data set

                    self.current_idx = 0

                seq_in = self.data[self.current_idx:self.current_idx + self.num_steps]

                x[i, :, 0] = np.array([self.char_to_int[char] for char in seq_in]) / float(self.n_vocab)

                seq_out = self.data[self.current_idx + self.num_steps]

                temp_y = self.char_to_int[seq_out]

                # convert all of temp_y into a one hot representation

                y[i, :] = to_categorical(temp_y, num_classes=self.n_vocab)

                self.current_idx += self.skip_step

            yield x, y
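The batching logic of the class can be read in isolation with a reduced version that leaves out the integer encoding and the one-hot step. This is a sketch under assumptions: `window_batches` is a hypothetical function written for this example, and the eight-character string stands in for the corpus.

```python
def window_batches(data, num_steps, batch_size, skip_step=1):
    """Yield (inputs, targets) batches forever, wrapping at the end of `data`."""
    idx = 0
    while True:
        inputs, targets = [], []
        for _ in range(batch_size):
            if idx + num_steps >= len(data):
                idx = 0  # wrap around to the start of the data
            inputs.append(data[idx:idx + num_steps])
            targets.append(data[idx + num_steps])
            idx += skip_step
        yield inputs, targets


batches = window_batches("abcdefgh", num_steps=3, batch_size=2, skip_step=2)
first = next(batches)
second = next(batches)
print(first)
print(second)
```

The second batch shows the wrap-around: once a window no longer fits, the position returns to the start of the data, so the generator never runs out and the number of batches per epoch has to be given to `fit` explicitly.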

batch_size = 128

train_data_generator = KerasBatchGenerator(raw_text, seq_length, batch_size, char_to_int, n_vocab, skip_step=3)

model.fit(train_data_generator.generate(), epochs=30, steps_per_epoch=n_chars//batch_size)

#model.fit(X, y, epochs=200, batch_size=32)

Epoch 1/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 33s 21ms/step - loss: 2.4504

Epoch 2/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 2.2917

Epoch 3/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 2.2230

Epoch 4/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 2.1850

Epoch 5/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 2.1429

Epoch 6/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.9989

Epoch 7/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 2.0430

Epoch 8/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.9867

Epoch 9/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 20ms/step - loss: 1.8116

Epoch 10/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8121

Epoch 11/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8064

Epoch 12/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8789

Epoch 13/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.7496

Epoch 14/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8017

Epoch 15/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8482

Epoch 16/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.6041

Epoch 17/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.6403

Epoch 18/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.6420

Epoch 19/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 20ms/step - loss: 1.7593

Epoch 20/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5478

Epoch 21/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.7173

Epoch 22/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.8039

Epoch 23/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5312

Epoch 24/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5308

Epoch 25/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 33s 21ms/step - loss: 1.5187

Epoch 26/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.7089

Epoch 27/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5227

Epoch 28/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5929

Epoch 29/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.7020

Epoch 30/30

1562/1562 ━━━━━━━━━━━━━━━━━━━━ 32s 21ms/step - loss: 1.5079

<keras.src.callbacks.history.History at 0x79008c3bc530>
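The 1562 steps per epoch in the log above come from `n_chars // batch_size`, where `n_chars` still holds the 200000 set for the first experiment rather than the length of the corpus. The sketch below works out how much text that covers, assuming the generator keeps its position from one epoch to the next; all values are taken from earlier cells and outputs.

```python
n_chars = 200000      # value left over from the first experiment
batch_size = 128
skip_step = 3
seq_length = 60
corpus_len = 4332554  # "Total Characters" reported earlier
epochs = 30

steps_per_epoch = n_chars // batch_size
windows_per_epoch = steps_per_epoch * batch_size

# windows the generator can produce before it wraps around
windows_per_pass = len(range(0, corpus_len - seq_length, skip_step))

# how many times the whole corpus is traversed over all epochs
passes = epochs * windows_per_epoch / windows_per_pass

print(steps_per_epoch, windows_per_epoch, windows_per_pass, round(passes, 2))
```

Under these assumptions an "epoch" here is a fixed number of batches rather than a full pass over the text, and the 30 epochs together walk through the corpus a little more than four times. This also helps explain why the loss fluctuates between epochs: consecutive epochs see different parts of the text.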

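As we saw in previous classes, a model built with a fixed batch size (batch_input_shape) requires batches of that same size at prediction time. To generate text from a single sequence, we create a new model with batch_size = 1 and copy the learned weights of the first model into it. The model above was defined without a fixed batch size, so it could also predict on a single sequence directly; the copy is shown here to illustrate the technique.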

# re-define the batch size

n_batch = 1

# re-define model

new_model = Sequential()

new_model.add(Input(shape=(X.shape[1], X.shape[2]),batch_size=n_batch))

new_model.add(LSTM(256, return_sequences=True))

new_model.add(Dropout(rate=0.2))

new_model.add(LSTM(256))

new_model.add(Dropout(rate=0.2))

new_model.add(Dense(y.shape[1], activation='softmax'))

# copy weights

old_weights = model.get_weights()

new_model.set_weights(old_weights)

# compile model

new_model.compile(loss='categorical_crossentropy', optimizer='adam')

# pick a random seed

start = np.random.randint(0, len(dataX)-1)

pattern = list(dataX[start])  # copy, so the append below does not modify dataX

print("Seed:")

print("\"", ''.join([int_to_char[value] for value in pattern]), "\"")

# generate characters

for i in range(1000):

    x = np.reshape(pattern, (1, seq_length, 1))

    x = x / float(n_vocab)

    prediction = new_model.predict(x, verbose=0)[0]

    index = sample(prediction, 0.3)

    result = int_to_char[index]

    seq_in = [int_to_char[value] for value in pattern]

    sys.stdout.write(result)

    pattern.append(index)

    pattern = pattern[1:len(pattern)]

print("\nDone.")

Seed:

" the

youngest, and his corn money. And he did according to t "

he LORD shall be she poiest shat were dorrt and she LORD bome tnto the LORD.

22:18 These was the family of the LORD shall be spooe for a swoat so the children of Israel.

22:13 And the LORD said unto Moses, The LORD shall spread unto the LORD.

22:16 And the LORD said unto Moses, Ge thou shalt not be a servant offering of the camp of the camp.

25:10 And the LORD said unto Moses, Boe the seventh cay of the camp of the camt, the sons of the LORD shall be all the sabernacle of the LORD: and the LORD said unto Moses, Tat the LORD shall be a senvart of the service of the children of Israel.

22:19 And the LORD said unto Moses, Ge thou shalt sake the LORD, the sons of the LORD shall be a pamestard ay the family of the LORD.

23:14 And the LORD said unto Moses, The LORD said unto Moses, The LORD shall be the campelitt of the LORD, and the people of the family of the camp of the sabernacle of the LORD.

22:26 And the LORD said unto Moses and the son of Eanah, the son of Mamanieh, the son of

Done.

# serialize model to JSON

model_json = new_model.to_json()

with open("modelgenDNNLSTM.json", "w") as json_file:

    json_file.write(model_json)

# serialize weights to HDF5

new_model.save_weights("modelgenDNNLSTM.weights.h5")

print("Saved model to disk")

Saved model to disk