03.03 - SVMs#

!wget --no-cache -O init.py -q https://raw.githubusercontent.com/rramosp/ai4eng.v1/main/content/init.py

import init; init.init(force_download=False); init.get_weblink()

from sklearn.datasets import *

import numpy as np

from local.lib import mlutils

from sklearn.tree import DecisionTreeClassifier

import matplotlib.pyplot as plt

%matplotlib inline

from IPython.display import Image

from mpl_toolkits.mplot3d import Axes3D

from sklearn.linear_model import LogisticRegression

from sklearn.datasets import make_circles

X,y = make_circles(200, noise=.05)

Feature transformation#

A linear classifier on higher dimensions#

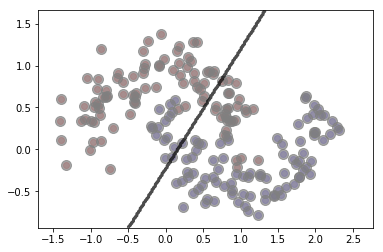

lr = LogisticRegression()

lr.fit(X,y)

print ("SCORE %.2f"%lr.score(X,y))

mlutils.plot_2Ddata(X, y, dots_alpha=.3)

mlutils.plot_2D_boundary(lr.predict, np.min(X, axis=0), np.max(X, axis=0),

line_width=3, line_alpha=.7, label=None)

SCORE 0.51

/opt/anaconda2/envs/p36/lib/python3.6/site-packages/sklearn/linear_model/logistic.py:433: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

(0.514125, 0.485875)

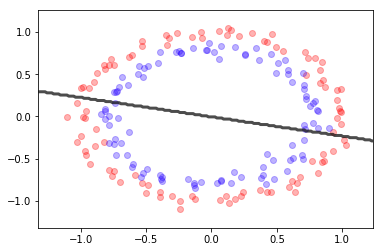

X,y = make_circles(300, noise=.05)

X = np.vstack((X.T,X[:,0]**2+X[:,1]**2)).T

X.shape

(300, 3)

fig = plt.figure(figsize=(8,6))

ax = fig.add_subplot(111, projection='3d')

ax.scatter(X[:,0][y==0], X[:,1][y==0],X[:,2][y==0], color="blue")

ax.scatter(X[:,0][y==1], X[:,1][y==1],X[:,2][y==1], color="red")

<mpl_toolkits.mplot3d.art3d.Path3DCollection at 0x7fa8b2663fd0>

lr = LogisticRegression()

lr.fit(X,y)

print ("SCORE %.2f"%lr.score(X,y))

SCORE 0.98

/opt/anaconda2/envs/p36/lib/python3.6/site-packages/sklearn/linear_model/logistic.py:433: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

Support vector machines#

observa que \(\gamma\) representa cuanto de cercanos han de estar dos puntos para considerarlos similares

## KEEPOUTPUT

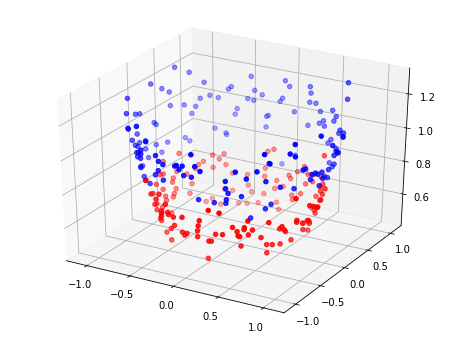

from sklearn.svm import SVC

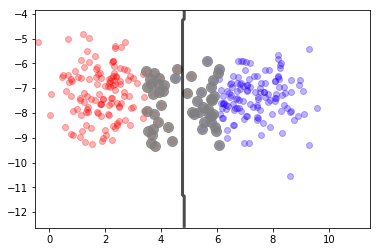

X,y = make_blobs(300, centers=2, cluster_std=1)

sv = SVC(gamma=1e-2)

sv.fit(X,y)

print (sv.score(X,y))

mlutils.plot_2Ddata(X, y, dots_alpha=.3)

mlutils.plot_2D_boundary(sv.predict, np.min(X, axis=0), np.max(X, axis=0),

line_width=3, line_alpha=.7, label=None)

plt.scatter(sv.support_vectors_[:,0], sv.support_vectors_[:,1], s=100, alpha=.8, color="gray")

print ("number of support vectors", len(sv.support_vectors_))

0.99

number of support vectors 50

## KEEPOUTPUT

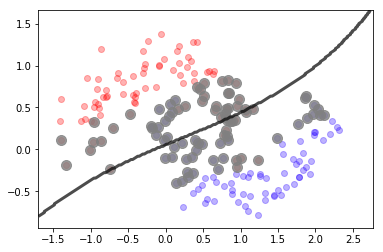

from sklearn.svm import SVC

X,y = make_moons(200, noise=0.2)

sv = SVC(gamma=.1)

sv.fit(X,y)

print (sv.score(X,y))

mlutils.plot_2Ddata(X, y, dots_alpha=.3)

mlutils.plot_2D_boundary(sv.predict, np.min(X, axis=0), np.max(X, axis=0),

line_width=3, line_alpha=.7, label=None)

plt.scatter(sv.support_vectors_[:,0], sv.support_vectors_[:,1], s=100, alpha=.8, color="gray")

0.88

<matplotlib.collections.PathCollection at 0x7ff51a2d75c0>

## KEEPOUTPUT

sv.support_vectors_.shape

(86, 2)

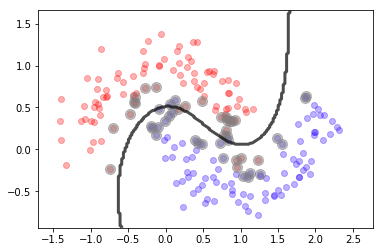

## KEEPOUTPUT

sv = SVC(gamma=1)

sv.fit(X,y)

print (sv.score(X,y))

mlutils.plot_2Ddata(X, y, dots_alpha=.3)

mlutils.plot_2D_boundary(sv.predict, np.min(X, axis=0), np.max(X, axis=0),

line_width=3, line_alpha=.7, label=None)

plt.scatter(sv.support_vectors_[:,0], sv.support_vectors_[:,1], s=100, alpha=.5, color="gray")

0.955

<matplotlib.collections.PathCollection at 0x7ff51a244630>

## KEEPOUTPUT

sv = SVC(gamma=1e-4)

sv.fit(X,y)

print (sv.score(X,y))

mlutils.plot_2Ddata(X, y, dots_alpha=.3)

mlutils.plot_2D_boundary(sv.predict, np.min(X, axis=0), np.max(X, axis=0),

line_width=3, line_alpha=.7, label=None)

plt.scatter(sv.support_vectors_[:,0], sv.support_vectors_[:,1], s=100, alpha=.7, color="gray")

0.79

<matplotlib.collections.PathCollection at 0x7ff51a2400b8>